Disclaimer: Fight the New Drug is a non-religious and non-legislative awareness and education organization. Some of the issues discussed in the following article are legislatively affiliated. Though our organization is non-legislative, we fully support the regulation of already illegal forms of pornography and sexual exploitation, and we support the fight against sex trafficking.

Recently, MindGeek executives came before Canada’s federal ethics committee and answered questions from members of parliament about claims that the company hosts and profits from nonconsensual content and child sexual abuse material (CSAM).

MindGeek is the parent company of some of the biggest websites and companies in the porn industry, including Pornhub, YouPorn, RedTube, and more. It has faced media and public scrutiny since award-winning journalist Nicholas Kristof’s article was published in The New York Times in December 2020. In response, MindGeek announced dramatic changes, including suspending all content from unverified users and prohibiting downloads from Pornhub. MindGeek has been criticized for appearing to make these changes not out of concern for victims of sexual abuse but out of fear of losing Visa’s and Mastercard’s services.

MindGeek has reportedly called the accusations of sexual exploitation “conspiracy theories” and “irresponsible and flagrantly untrue,” and has denied the existence of abusive material on its adult sites. Yet several outlets, including ours, have confirmed instances of sexual abuse and underage material on Pornhub.

Feras Antoon, CEO of MindGeek Canada, and David Tassillo, Chief Operations Officer, answered questions from Canadian MPs on Friday, February 5th, and stated that they would like to “stand behind what people see on the site.” [source quote] Those were their own words, and in the moment, they felt like a condemning phrase for a company being investigated for exactly that: the illegal content found on its sites.

At the conclusion of the two-hour meeting, it was clear the MPs were disappointed with the responses from Antoon and Tassillo, to say the least. The committee compiled an extensive list of documents it has the legal right to request from MindGeek, including tax records and reports on the number of content removal requests by victims. The committee is expected to release a report soon, which could give outsiders their first real look inside the largest porn company in the world.

To view the entire transcript of the hearing, click here.

Reported lies

Throughout the long but very eventful Q&A session, Antoon and Tassillo stayed close to their messaging about MindGeek having zero tolerance for nonconsensual content and child sexual abuse material (CSAM). [source quote] That statement and others made during the hearing are concerning and likely mischaracterizations, so we compiled a list of claims made to the Canadian government that do not appear to be completely true.

Here are eight statements made during the session, and our fact-check of each. Note that some of these claims are summarized in a short statement rather than quoted verbatim. To see the transcript source of each claim made during the hearing, click where it says “[source quote].”

1. Claim: MindGeek does not profit from nonconsensual or underage content. [source quote]

During the hearing, the executives revealed that roughly 50 percent of MindGeek’s revenue comes from advertisements on its sites. [source quote] For their claim of not profiting from illicit material to hold, no ads could ever have appeared on the same pages as such videos; however, ads have reportedly appeared next to abusive content in the past. When pressed, the executives said they did not know whether MindGeek had received money from specific cases of nonconsensual content. [source quote]

Note that in December 2020, 40 trafficking and exploitation survivors, identified as “Jane Doe,” filed a lawsuit against MindGeek for allegedly knowingly profiting from images and videos of their sex trafficking nightmares and failing to properly moderate MindGeek-owned sites for the abusive videos.

2. Claim: Nonconsensual content is against Pornhub’s business model. [source quote]

Both executives rejected the idea that Pornhub’s business model supports nonconsensual content. Instead, they pointed out that the average user is not looking for illegal content and will leave the site if they believe Pornhub is hosting it, costing the company ad revenue in the long run. [source quote]

This latter claim is accurate for the many users who have lost trust in Pornhub, but the porn tube sites MindGeek pioneered were reportedly built on stolen videos and images. Until only a few months ago, Pornhub allowed users to upload whatever they wanted without being verified. Some of these uploads have been proven to be nonconsensual: from videos of trafficking victims to underage victims being abused by family members.

It stands to reason that the site likely profits from each video upload, because every upload adds another page on which ads can run. [source quote]

3. Claim: When content is removed, it cannot be immediately reuploaded. [source quote] [source quote]

The harm of posting CSAM or sharing nonconsensual content is compounded by downloads and reuploads. A single post quickly becomes a nightmarish game of whack-a-mole, and victims spend hours submitting takedown requests only to see the same image reposted later.

MindGeek prohibited content downloads on Pornhub in December 2020, and it also made it so only verified site users can upload content. To prevent removed content from being reuploaded, though, Pornhub refers victims to third-party fingerprinting software. [source quote] An investigation by VICE revealed that minor edits to a fingerprinted video could bypass the safeguards and let it end up on Pornhub again. So the answer is yes: content can reportedly still be reuploaded if it is altered and uploaded by a verified user.

4. Claim: Every piece of content is reviewed before being available to view on Pornhub or other MindGeek-owned sites. [source quote] [source quote]

When asked about content reviewing, Tassillo said, “I can guarantee you that every piece of content, before it’s actually made available on the website, goes through several different filters… The way we do it, irrespective of the amount of content, is that the content will not go live unless a human moderator views it. I want to assure the panel of that.” [source quote] [source quote]

This is a seemingly impossible feat, and the math just doesn’t add up. From the hearing, we know MindGeek employs 1,800 people, [source quote] but we don’t know how many of them are human moderators; the number does not appear to have been clarified in the hearing. Kristof’s NYT article suggested 80, and others have unofficially estimated far fewer. For this exercise, let’s be generous and assume 80 moderators (or “compliance agents,” as MindGeek calls them [source quote]) working 40 hours a week for 52 weeks of the year. That adds up to 166,400 hours of combined review time per year, or 2,080 working hours per moderator.

By Pornhub’s own reports, 1.39 million hours of new content was uploaded in 2019 (with possibly more uploaded in 2020). With 80 moderators, that would mean more than 17,000 hours of footage to review per moderator each year, over eight times the 2,080 hours each one would actually work. Do Pornhub moderators have more hours in a day than the average human, we wonder? Perhaps MindGeek has many, many more full-time human moderators than previously stated. Even so, how many more would be needed for every single piece of content to be reviewed, as claimed in the hearing, and why wouldn’t the executives clarify this?
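To make the moderation math above easy to check, here is a short back-of-the-envelope sketch in Python. It uses only the figures cited in this section and assumes, as our exercise does, 80 moderators working 40-hour weeks year-round, with every hour of uploaded footage watched once at normal (1x) speed. These assumptions are ours, not MindGeek’s, and the constant names are purely illustrative.

```python
# Back-of-the-envelope check of the moderation math cited above.
# Assumptions (ours, not confirmed by MindGeek): 80 full-time moderators,
# 40-hour weeks, 52 weeks a year, every upload watched once at 1x speed.

MODERATORS = 80                   # NYT estimate, assumed for this exercise
HOURS_PER_WEEK = 40
WEEKS_PER_YEAR = 52
UPLOADED_HOURS_2019 = 1_390_000   # Pornhub's reported new content for 2019


def review_capacity(moderators: int) -> int:
    """Total hours of review time a team of moderators has in one year."""
    return moderators * HOURS_PER_WEEK * WEEKS_PER_YEAR


hours_per_moderator = review_capacity(1)
team_capacity = review_capacity(MODERATORS)
footage_per_moderator = UPLOADED_HOURS_2019 / MODERATORS

# Smallest whole number of full-time moderators who could watch everything once
# (ceiling division: negate, floor-divide, negate again).
moderators_needed = -(-UPLOADED_HOURS_2019 // hours_per_moderator)

print(f"Review hours per moderator per year: {hours_per_moderator:,}")
print(f"Review hours for the whole team:     {team_capacity:,}")
print(f"Footage per moderator:               {footage_per_moderator:,.0f} hours")
print(f"Moderators needed at 1x speed:       {moderators_needed:,}")
```

Under these assumptions, the script reports 2,080 review hours per moderator, 166,400 for the whole team, 17,375 hours of footage per moderator, and a minimum of 669 full-time moderators just to watch 2019’s uploads once, with no time for breaks, second looks, or re-reviews. If MindGeek’s actual staffing or review process differs, the numbers change accordingly.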

Tassillo agreed during the hearing that when you put it into “linear math,” reviewing every video sounds impossible, but he still claims they manage to do it. [source quote] He explained that there are different “buckets” of content, so when content from professional studios in the US is uploaded, MindGeek’s moderators don’t have to review the video as carefully [source quote] because it has been vetted during production. But note that abuse and nonconsensual activity still happen on mainstream studio sets.

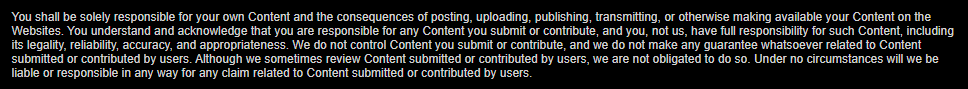

To further emphasize how seemingly unlikely this claim is that every piece of content is allegedly reviewed, Pornhub’s own terms of service, updated on December 8th, 2020, states: “Although we sometimes review Content submitted or contributed by users, we are not obligated to do so.” Emphasis on the sometimes.

Here’s a screenshot of their terms of service:

5. Claim: Pornhub moderators are told to err on the side of caution. [source quote]

To assure MPs that Pornhub’s human moderation is effective, Tassillo said they train their moderators to reject content if they have any doubt about whether it is underage, nonconsensual, or in any other way inappropriate. [source quote] This directly contradicts a former MindGeek employee, who said the goal is to “let as much content as possible go through.”

Also, if moderators truly “err on the side of caution,” then any CSAM that made it onto the site was approved by a human reviewer. Wouldn’t that be admitting direct culpability for letting CSAM through? [source quote]

6. Claim: In porn, the search term “teen” does not mean underage, it actually refers to adults aged 18-27. [source quote]

This was an odd claim by Tassillo. He explained that when most people hear the word “teen” they mean 13-19 years old, but in the adult world “teen” actually means 18-27. [source quote] There is some evidence that “teen” is understood in the industry to mean legal adult performers who look young, but this definition is also a clever way to spin a controversial “genre” as ethical. We do not believe that the average porn consumer thinks of 18-27 when searching for “teen” porn.

Note that while Pornhub does list “teen” porn as “Teen (18+),” many of the storylines in “teen” porn reportedly fantasize about underage sexual encounters. Tassillo explained that search terms on Pornhub are controlled, saying, “If someone even does a search on our site looking for ’14’ or ’15,’ obviously no results are found.” [source quote] The problem is that there are countless ways around this particular restriction.

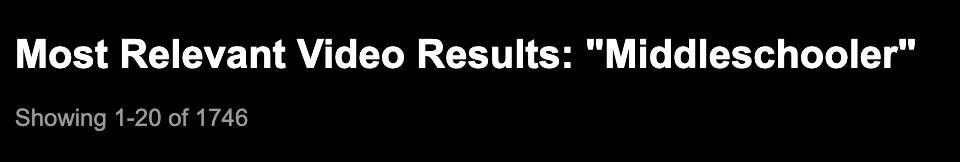

When a committee member mentioned that there are videos on Pornhub under the term “middle schooler,” Tassillo said, “There shouldn’t be any of those videos. From my understanding at this current point, it is a word that’s on the banned list.” [source quote]

Apparently, a banned list is not an ironclad solution. A search on Pornhub in February 2021 for “middleschooler,” yielded over 1,700 results:

7. Claim: MindGeek is a leader among social media and adult platforms in preventing and removing nonconsensual material. [source quote]

The porn company leans into its self-proclaimed title of “leader” and pats itself on the back for reporting illicit content to the National Center for Missing and Exploited Children (NCMEC). [source quote] Reporting to NCMEC is the standard expected of tech companies, and being a leader would suggest MindGeek was among the first to do so, when in reality the policy reportedly dates back only to 2020, a detail much less advertised during the hearing. [source quote]

We do not yet know how many reports MindGeek made in 2020, and MindGeek officials could not confirm that any reports were made in 2019, [source quote] but a large number would suggest genuine efforts to report content. For example, Facebook made over 15 million reports to NCMEC in 2019. This is by far the largest number of reports from any tech company. That does not necessarily mean there is more abusive content on Facebook than on, say, Twitter, but rather that Facebook is likely doing a better job of identifying and reporting it.

A member of the Ethics Committee said that his research indicated that MindGeek made no reports to NCMEC in 2019. [source quote] If MindGeek is such a leader among adult platforms, why couldn’t its representatives clearly state how many reports of CSAM were made to NCMEC in any year, including 2019 or 2020? [source quote]

8. Claim: There is “zero child sexual abuse material (CSAM)” on any MindGeek site. [source quote]

At the hearing on February 5th, Antoon said MindGeek “should have zero child sexual abuse material on our websites.” [source quote]

Prior to December 2020, CSAM was, unfortunately, findable on MindGeek sites according to our research and according to Kristof’s New York Times article. MindGeek likely asserts its “zero CSAM” claim so boldly and confidently because of the drastic steps it has only very recently taken, one being suspending content uploaded by unverified users. By suspending all content from unverified users, MindGeek likely removed most of this material, but it reportedly cannot be guaranteed that it removed all of it. Consider the case of a verified Pornhub account uploading almost 60 videos of an underage, sex-trafficked teen from Florida. Also, consider how experts have claimed that sites accepting user-uploaded content, even from verified users, are unlikely to have completely prevented or eradicated CSAM.

Even so, MindGeek cannot fully support this claim, because it has not taken other steps to close the loopholes in its system. Consider the other pieces of information revealed in this hearing: MindGeek automatically checks the ID of the uploader but not of a secondary performer in a video, [source quote] and it is reportedly impossible for its moderation team to carefully review every single piece of content on the site, though the executives maintain they manage it. [source quote] Those two factors alone make this claim difficult to support.

In addition, it has been documented that site users do not necessarily report CSAM promptly when they encounter it on the site. Here are two prominent, widely reported, and separate examples: by the time the nonconsensually uploaded CSAM of underage abuse survivors Serena Fleites and Rose Kalemba was removed from Pornhub at their pleas, videos of their violations had reportedly amassed hundreds of thousands of views each. If the moderation team does not catch every piece of CSAM, and site users cannot be relied upon to report it, the claim that there is “zero child sexual abuse material (CSAM)” on any MindGeek site rings hollow.

Lies of omission

There were allegedly outright false statements, and then there were reportedly lies of omission by the MindGeek executives during the meeting. They appeared to arrive with little preparation or documentation and were unable to cite key facts about how the business operates and how it responds to victims. [source quote] Antoon and Tassillo said their inability to respond accurately does not mean MindGeek does not have the information, but that they simply could not recall it “from the tops of their heads.” [source quote] [source quote] [source quote]

Here are a few very basic facts about the site, and widely publicized issues related to CSAM, that the executives claimed to have no knowledge of.

1. MindGeek executives claim they are not aware of the specific content removal requests and attempts by two victims (Serena Fleites and Rose Kalemba) to have their underage, nonconsensual videos removed from Pornhub, despite both victims’ stories receiving national and international coverage within the last year. [source quote] [source quote] [source quote] [source quote] [source quote]

2. MindGeek claims they are not aware of how many victims have submitted content removal requests for nonconsensual content in any given year, specifically 2019 or 2020. [source quote]

3. MindGeek claims they are unsure if they have reported specific instances of abuse to the police, as required by Canadian law. [source quote] [source quote] The executives say they report to NCMEC, but note that this policy only began in 2020. [source quote] [source quote]

4. MindGeek did not say that it has apologized, or plans to apologize, to abuse victims. [source quote] It has no stated intention of compensating victims. [source quote] [source quote] [source quote] [source quote] The executives could not provide information about how much money MindGeek has paid in legal settlements. [source quote]

5. MindGeek claims their content uploading system is sophisticated and thorough enough to prevent CSAM from appearing on their sites, [source quote] [source quote] but there are clear, significant, demonstrable gaps in the process as we mentioned. Specifically, MindGeek only requires identification for the verified account user, not a secondary performer in an uploaded video. [source quote] This is problematic as seen in the case of a trafficked teen who was discovered in videos on Pornhub that were uploaded by a verified user. [source quote] [source quote] According to MindGeek’s current, updated process, they would not request the identification of the secondary performer or confirm consent unless there was reason to believe they are under duress or underage, [source quote] meaning the same kind of abuse experienced by that teen could possibly happen again.

What comes next

MindGeek is currently facing two class-action lawsuits: a $600 million suit in Canada on behalf of victims dating as far back as 2007, and another in the US. The plaintiff in the Canadian case reportedly learned of a video of her abuse on Pornhub as recently as 2019 and requested its removal in 2020, but only ever received an automated response. The two Jane Does in the US suit are survivors of child sex trafficking, and videos of their abuse were reportedly uploaded to MindGeek sites, which allegedly profited from those specific videos. The suit claims MindGeek at no point attempted to verify the identification or age of the victims, which is consistent with our fifth point of omission: the porn company does not automatically vet secondary performers. In these cases, MindGeek reportedly missed opportunities to prevent sexual exploitation.

While MindGeek has made dramatic changes since facing the wave of criticism that began in December 2020, it remains clear from the executives’ statements that the company is apparently unwilling to accept responsibility for the part it played in many abuses that have severely impacted the lives of victims.

As the public eye focuses on MindGeek’s next move, the executives made one key truthful statement: “The problem of nonconsensual content and CSAM is bigger than just” Pornhub. [source quote]

While this may have been said to deflect anger directed at MindGeek, it serves as a reminder to the rest of us that there are other sites in the adult industry with fewer restrictions and even less media attention. While we must hold MindGeek accountable, the work to stop abusive content from spreading online is far from over. This is only the beginning.

Your Support Matters Now More Than Ever

Most kids today are exposed to porn by the age of 12. By the time they’re teenagers, 75% of boys and 70% of girls have already viewed it (Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense), often before they’ve had a single healthy conversation about it.

Even more concerning: over half of boys and nearly 40% of girls believe porn is a realistic depiction of sex (Martellozzo, E., Monaghan, A., Adler, J. R., Davidson, J., Leyva, R., & Horvath, M. A. H. (2016). “I wasn’t sure it was normal to watch it”: A quantitative and qualitative examination of the impact of online pornography on the values, attitudes, beliefs and behaviours of children and young people. Middlesex University, NSPCC, & Office of the Children’s Commissioner). And among teens who have seen porn, more than 79% use it to learn how to have sex (Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense). That means millions of young people are getting sex ed from violent, degrading content, which becomes their baseline understanding of intimacy. Among the most popular porn, 33% to 88% of videos contain physical aggression and nonconsensual violence-related themes (Fritz, N., Malic, V., Paul, B., & Zhou, Y. (2020). A descriptive analysis of the types, targets, and relative frequency of aggression in mainstream pornography. Archives of Sexual Behavior, 49(8), 3041-3053. doi:10.1007/s10508-020-01773-0; Bridges et al. (2010). Aggression and sexual behavior in best-selling pornography videos: A content analysis. Violence Against Women).

From increasing rates of loneliness, depression, and self-doubt, to distorted views of sex, reduced relationship satisfaction, and riskier sexual behavior among teens, porn is impacting individuals, relationships, and society worldwide (Fight the New Drug. (2024, May). Get the Facts (Series of web articles). Fight the New Drug).

This is why Fight the New Drug exists—but we can’t do it without you.

Your donation directly fuels the creation of new educational resources, including our awareness-raising videos, podcasts, research-driven articles, engaging school presentations, and digital tools that reach youth where they are: online and in school. It equips individuals, parents, educators, and youth with trustworthy resources to start the conversation.

Will you join us? We’re grateful for whatever you can give, but a recurring donation makes the biggest difference. Every dollar directly supports our vital work, and every individual we reach decreases sexual exploitation. Let’s fight for real love.