Disclaimer: Fight the New Drug is a non-religious and non-legislative awareness and education organization. Some of the issues discussed in the following article are legislatively affiliated. Including links and discussions about these legislative matters does not constitute an endorsement by Fight the New Drug. Though our organization is non-legislative, we fully support the regulation of already illegal forms of pornography and sexual exploitation, including the fight against sex trafficking.

It’s not uncommon to hear about big tech companies struggling to deal with unwanted or illegal content on their platforms these days.

Moderating online content for violence and sexual abuse like child sexual abuse material—commonly known as “child pornography”—is a challenge that’s tough to solve completely.

The popular information-sharing social platform Reddit has also faced this problem. In April 2021, a young woman filed a class-action lawsuit against Reddit claiming that “Reddit knowingly benefits from lax enforcement of its content policies, including for child pornography.”

What happened?

This woman, going by the pseudonym Jane Doe, accused Reddit of distributing child sexual abuse material (CSAM) depicting her. She stated that in 2019, an abusive ex-boyfriend began posting sexual photos and videos of her that had been taken without her consent when she was only 16 years old.

It’s important to note that, regardless of consent, the fact that she was under 18 when these photos were taken and subsequently shared means they are CSAM.

When the woman reported the incidents to Reddit moderators, she reportedly had to wait “several days” for the content to be taken down, while the ex-boyfriend continued to open up new accounts and repost the images and videos despite his old account being banned.

According to the lawsuit, Reddit’s “[refusal] to help” has meant that it’s fallen “to Jane Doe to monitor no less than 36 subreddits—that she knows of— which Reddit allowed her ex-boyfriend to repeatedly use to repeatedly post child pornography.”

The lawsuit also states that all of this has caused “Ms. Doe great anxiety, distress, and sleeplessness…resulting in withdrawing from school and seeking therapy,” an experience common among other victims of image-based sexual abuse.

A Reddit spokesperson responded in a statement to The Verge:

“CSAM has no place on the Reddit platform. We actively maintain policies and procedures that don’t just follow the law, but go above and beyond it. We deploy both automated tools and human intelligence to proactively detect and prevent the dissemination of CSAM material. When we find such material, we purge it and permanently ban the user from accessing Reddit.”

However, many argue that these efforts are insufficient, and the class action has gone beyond Jane Doe’s case to include anyone who has had content posted of them on Reddit while they were minors.

Formally, The Verge reports that the lawsuit accuses Reddit of allowing site content that distributes CSAM, failing to report CSAM, and violating the Trafficking Victims Protection Act.

Not the first time Reddit has been accused of CSAM distribution

These claims are very serious, and the legal context in which they are being evaluated is controversial. However, CSAM possession and distribution are acts of abuse, and this is not the first time Reddit has found itself accused of promoting CSAM.

In fact, the lawsuit states that Reddit itself has admitted that it is aware of the presence of CSAM on its website.

The lawsuit presents a series of examples of questionable content on the site, including several now-removed subreddits with titles referencing “jailbait,” such as an infamous forum that was removed in 2011 after a media controversy. That forum’s policy did not allow nudity, though according to The Verge’s report, it encouraged “sexually suggestive” content.

What can we do about CSAM?

CSAM, or any form of sexual abuse material for that matter, is clearly harmful and abusive. We sympathize with victims of image-based sexual abuse, like Jane, whose story is, unfortunately, becoming more and more common.

This current dilemma involving Reddit highlights how many platforms, including popular mainstream porn sites, fail to adequately respond to and remove this illegal and hurtful content, whether from inaction or inability. It also reflects an unfortunate increase in CSAM on the internet, especially during the COVID-19 pandemic.

Despite this concerning reality, the issue is being addressed in both the cultural and legal realms. There’s talk of passing legislation that addresses the very issue Jane is facing: nonconsensual content distribution. There are also a few things we can do to combat this issue:

- Stay informed; know the facts. Through different resources like videos and articles, we can better understand the situation, a necessary starting point for solving any problem.

- Report nonconsensual content when you see it; this is the most important step. Cliché as it may sound, reporting is one of the best ways to bring to light what slips through the cracks of even the best technology and platform content moderators. Reddit offers reporting guides for several types of content.

- If you or someone you know has been victimized by this type of abuse, there are also resources that can help.

This is no easy fight, but it’s one that’s worth it. Sexual abuse isn’t sexy, and nonconsensual content should not be normalized. You with us?

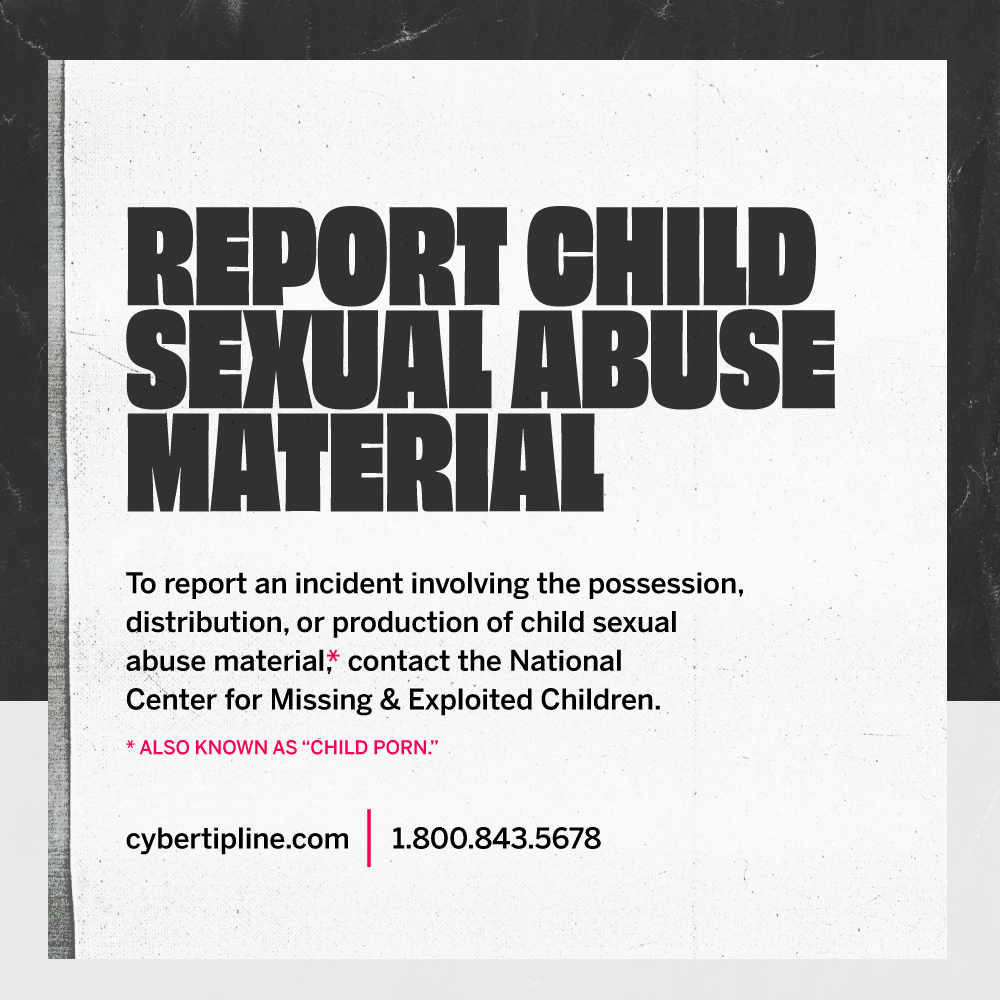

To report an incident involving the possession, distribution, receipt, or production of CSAM, file a report on the National Center for Missing & Exploited Children (NCMEC)’s website at www.cybertipline.com, or call 1-800-843-5678.

Your Support Matters Now More Than Ever

Most kids today are exposed to porn by the age of 12. By the time they’re teenagers, 75% of boys and 70% of girls have already viewed it [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.], often before they’ve had a single healthy conversation about it.

Even more concerning: over half of boys and nearly 40% of girls believe porn is a realistic depiction of sex [Martellozzo, E., Monaghan, A., Adler, J. R., Davidson, J., Leyva, R., & Horvath, M. A. H. (2016). “I wasn’t sure it was normal to watch it”: A quantitative and qualitative examination of the impact of online pornography on the values, attitudes, beliefs and behaviours of children and young people. Middlesex University, NSPCC, & Office of the Children’s Commissioner.]. And among teens who have seen porn, more than 79% use it to learn how to have sex [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.]. That means millions of young people are getting sex ed from violent, degrading content, which becomes their baseline understanding of intimacy. Among the most popular porn videos, between 33% and 88% contain physical aggression and nonconsensual violence-related themes [Fritz, N., Malic, V., Paul, B., & Zhou, Y. (2020). A descriptive analysis of the types, targets, and relative frequency of aggression in mainstream pornography. Archives of Sexual Behavior, 49(8), 3041-3053. doi:10.1007/s10508-020-01773-0; Bridges et al., 2010, “Aggression and Sexual Behavior in Best-Selling Pornography Videos: A Content Analysis,” Violence Against Women.].

From increasing rates of loneliness, depression, and self-doubt, to distorted views of sex, reduced relationship satisfaction, and riskier sexual behavior among teens, porn is impacting individuals, relationships, and society worldwide [Fight the New Drug. (2024, May). Get the Facts (Series of web articles). Fight the New Drug.].

This is why Fight the New Drug exists—but we can’t do it without you.

Your donation directly fuels the creation of new educational resources, including our awareness-raising videos, podcasts, research-driven articles, engaging school presentations, and digital tools that reach youth where they are: online and in school. It equips individuals, parents, educators, and youth with trustworthy resources to start the conversation.

Will you join us? We’re grateful for whatever you can give—but a recurring donation makes the biggest difference. Every dollar directly supports our vital work, and every individual we reach decreases sexual exploitation. Let’s fight for real love: