How would you feel if we told you that someone could pull a fully dressed, digitized photo of you from the internet and easily undress it in seconds—all without your permission?

And what if they could also share a link to that now-nude photo with anyone in the world: your crush, your mom, your grandmother, your whole school, and beyond?

If the experiences of deepfake survivors are any indication, that kind of image-based sexual abuse would be traumatizing for anyone and could lead to a whole host of severe and disruptive mental health effects.

Here’s the worst part, though: this deepfake technology exists today, and it is being used against everyday people, particularly women.

What is a “deepfake”?

Before we discuss the disturbing technology described above, let’s talk about what deepfakes are.

Deepfakes are a digital phenomenon that has emerged over the last few years. A “deepfake” is a digital manipulation of a real person’s face or voice, created using deep learning technology that “learns” from existing data, such as photos and videos of that person.

That data can then be used to manufacture fake audio, images, or videos of someone, making them appear to do or say things they never did or said in real life.

In this YouTube video, you’ll see several examples of deepfakes: Elon Musk’s face replacing Charlie Sheen’s, Mike Tyson’s face replacing Oprah’s, and Kevin Hart’s face superimposed on Mr. T’s, among others.

The video shows the fun and relatively harmless side of deepfakes, but the technology also raises serious ethical concerns. When researchers at the AI cybersecurity company Deeptrace searched the internet in 2019, they found that of the nearly 15,000 deepfakes online, 96% were pornographic.

That means many people have been featured, without their consent, in thousands of sexually explicit videos and images.

The newest deepfake technology making the rounds, and causing concern, follows the same pattern.

The deepfake tool undressing thousands

In 2020, a deepfake website launched that had developed its own “state of the art” deep-learning image translation algorithms to “nudify” female bodies.

In other words, any user can upload any photo of a clothed woman or female-presenting person, and the site will generate a nude version of it.

We’re not going to name it here or include any identifying information that could lead someone to the site.

According to HuffPost, when users upload photos of women and female-presenting people, the results are strikingly realistic, often with no glitches or visual clues that the images are deepfakes. While the site claims not to save any uploaded or “nudified” photos, it does give the uploader a link to the nude image that can be shared with anyone.

By the summer of 2021, the site had already received more than 38 million visits that year.

And given the “referral” program through which users can publicly share a personalized link and earn rewards for each new person who clicks on it, it wouldn’t be surprising to see that number climb even higher.

Why does this tool exist and is anyone trying to address it?

This kind of site can clearly cause immeasurable harm to anyone depicted, which in this case means women. So how is it still up and running? Is anyone trying to do something about it?

The first question is easy to answer: demand. The tens of millions of visits the site received in a matter of months show that people want to use it.

And where there’s demand, there’s money. Site users are normally only able to “nudify” one picture for free every two hours. Alternatively, they can pay a cryptocurrency fee to skip the wait time for a specified number of additional photos.

The second question is harder to answer. When HuffPost contacted the site’s original web host, the host terminated its services and the site briefly went offline. However, it was back up less than a day later with a new host.

It’s currently unknown who operates the site; its operators didn’t respond to repeated interview requests from several media outlets.

Regardless of who the creators are, it’s clear who the consumers are: the U.S. has reportedly been by far the site’s leading source of traffic.

U.S. lawmakers have shown little concern about abusive deepfakes beyond their potential to cause political problems. Social media companies have also been slow to respond to complaints about nonconsensual pornographic content (real or fake), for which they tend to face no liability.

United Kingdom-based deepfake expert Henry Ajder calls the situation “really, really bleak” because the tool’s “realism has improved massively” and because the “vast majority of people using these [tools] want to target people they know.”

Deepfake technology has already been weaponized against many celebrities and influencers, and now everyday women have become targets in unthinkable ways.

Why this matters

What it comes down to is this: pornographic deepfakes are nonconsensual porn.

Deepfake pictures and videos are created without the victim’s consent, knowledge, or input. The victim has no control over what they appear to do or say, or over who sees and shares the content. This is a very serious offense.

Victims of image-based sexual abuse, including nonconsensual porn such as deepfakes, face catastrophic emotional consequences. In one qualitative study of revenge porn survivors, participants experienced many disruptive mental health issues after victimization that affected their daily lives. In the period soon after they were victimized, participants generally relied on negative coping mechanisms such as denial and self-medicating.

Nearly all participants experienced a general loss of trust in others, and many also experienced more severe and disruptive mental health effects. The researchers’ inductive analysis identified posttraumatic stress disorder (PTSD), anxiety, depression, suicidal thoughts, and several other mental health effects.

Studies like this one show that nonconsensual sexual imagery, including deepfakes, can be degrading, humiliating, and potentially life-ruining. There is no place for websites that create it.

Click here to learn what you can do if you’re a victim of revenge porn or deepfake porn.

Your Support Matters Now More Than Ever

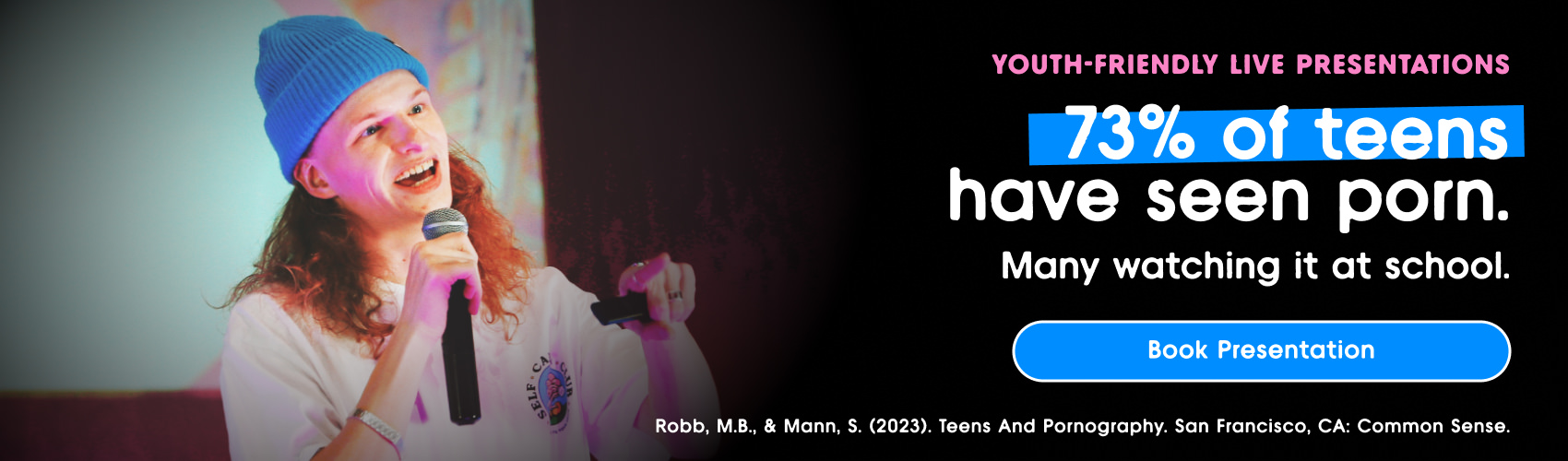

Most kids today are exposed to porn by the age of 12. By the time they’re teenagers, 75% of boys and 70% of girls have already viewed it [Robb, M. B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.], often before they’ve had a single healthy conversation about it.

Even more concerning: over half of boys and nearly 40% of girls believe porn is a realistic depiction of sex [Martellozzo, E., Monaghan, A., Adler, J. R., Davidson, J., Leyva, R., & Horvath, M. A. H. (2016). “I wasn’t sure it was normal to watch it”: A quantitative and qualitative examination of the impact of online pornography on the values, attitudes, beliefs and behaviours of children and young people. Middlesex University, NSPCC, & Office of the Children’s Commissioner.]. And among teens who have seen porn, more than 79% use it to learn how to have sex [Robb, M. B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.]. That means millions of young people are getting sex ed from violent, degrading content, which becomes their baseline understanding of intimacy. Among the most popular porn videos, 33% to 88% contain physical aggression and nonconsensual, violence-related themes [Fritz, N., Malic, V., Paul, B., & Zhou, Y. (2020). A descriptive analysis of the types, targets, and relative frequency of aggression in mainstream pornography. Archives of Sexual Behavior, 49(8), 3041-3053. doi:10.1007/s10508-020-01773-0; Bridges et al. (2010). Aggression and sexual behavior in best-selling pornography videos: A content analysis. Violence Against Women.].

From increasing rates of loneliness, depression, and self-doubt, to distorted views of sex, reduced relationship satisfaction, and riskier sexual behavior among teens, porn is impacting individuals, relationships, and society worldwide [Fight the New Drug. (2024, May). Get the Facts (Series of web articles). Fight the New Drug.].

This is why Fight the New Drug exists—but we can’t do it without you.

Your donation directly fuels the creation of new educational resources, including our awareness-raising videos, podcasts, research-driven articles, engaging school presentations, and digital tools that reach youth where they are: online and in school. It equips individuals, parents, educators, and youth with trustworthy resources to start the conversation.

Will you join us? We’re grateful for whatever you can give—but a recurring donation makes the biggest difference. Every dollar directly supports our vital work, and every individual we reach decreases sexual exploitation. Let’s fight for real love: