Every year, the D.C.-based nonprofit National Center on Sexual Exploitation (NCOSE) releases a “Dirty Dozen” list calling out twelve mainstream companies that profit from sexual exploitation in some way.

Over the years, the “Dirty Dozen” list has driven meaningful change at major companies, including Google, Netflix, and TikTok. Publicly exposing these organizations’ connections to sexual exploitation, and sharing ways the public can make a difference, has prompted many of them to revise their policies and practices.

Since the 2023 “Dirty Dozen” list, Snapchat has improved its ability to detect and remove sexually explicit content by implementing new product safeguards. Kik added age requirements, an option to block direct messages from strangers, and bots that detect nudity in livestreams. Discord added stricter safety settings and policies on child sexual exploitation (more on that later). Apple now automatically blurs nude images for kids aged 12 and under. Reddit is also taking steps, restricting more pornography and prohibiting content that sexualizes children. Instagram even strengthened its parental controls and started a task force to investigate how its platform facilitates the sale of child sexual abuse material.

These changes in technology and policy may seem small, but when millions of users interact with content on these platforms, even small changes make a huge difference.

Now, let’s turn to NCOSE’s 2024 “Dirty Dozen” list. Below is a recap of some of the mainstream contributors to sexual exploitation it names. Content for this article comes from NCOSE’s original post; check out the full list for more information.

Before we begin, there are a few terms you should be familiar with.

- Image-based sexual abuse: the capture, creation, and/or sharing of sexually explicit images without the subject’s knowledge or consent

- CSAM: Child Sexual Abuse Material (formerly referred to as child pornography)

Let’s dive in.

2024 Dirty Dozen Companies

Apple

“Apple’s record is rotten when it comes to child protection.” – NCOSE

After making the “Dirty Dozen” list last year, Apple did expand protections by blurring sexually explicit content and filtering out porn for users 12 and under. However, it won’t do the same for teens. According to NCOSE, Apple also scrapped its plans to detect child sexual abuse material on iCloud.

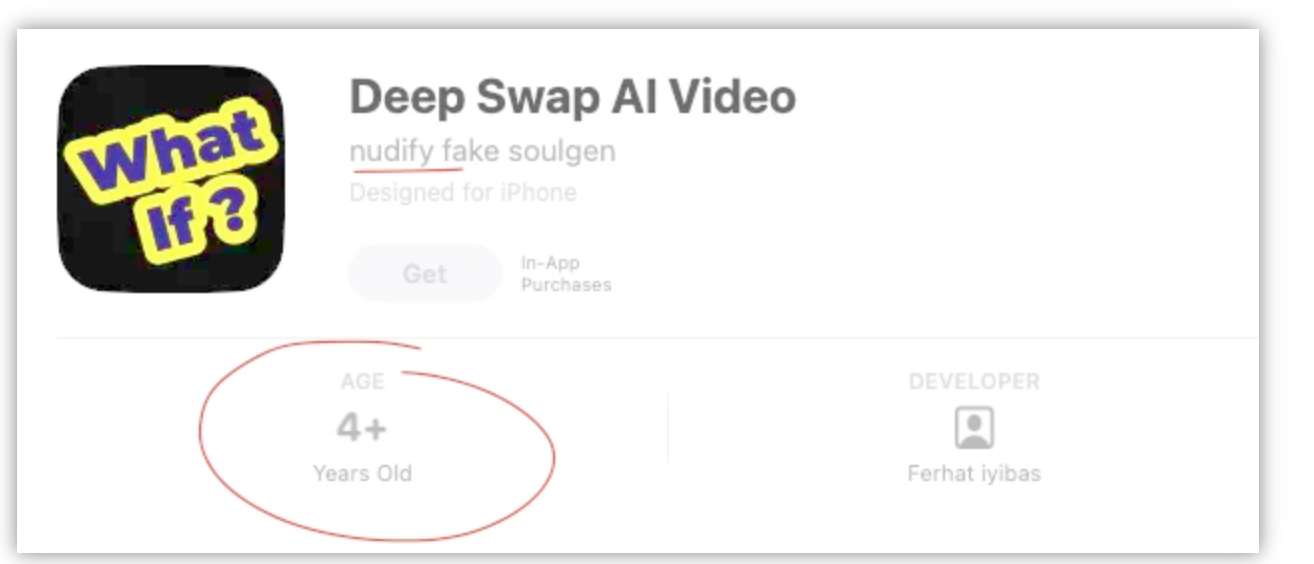

The App Store also hosts apps used to create deepfake pornography and other forms of sexual exploitation. One deepfake app is even rated as suitable for users aged four and up. The App Store hosts a variety of other apps that are dangerous for kids, with confusing age ratings and descriptions. Apple supposedly reviews each app before it is made available to the public and checks that apps don’t mislead users or contain “overtly sexual or pornographic material.”

With over 87% of US teens using iPhones, the lack of safeguards on these devices puts young users at serious risk of exposure to dangerous content. NCOSE urges Apple to step up as a tech leader and do more to protect children from sexual exploitation.

Cash App

According to law enforcement, this popular payment app is one of the top platforms used in sexual exploitation crimes, including the buying and selling of child sexual abuse material (CSAM), prostitution, and sex trafficking.

The app creates an ideal environment for criminal activity. It is anonymous, allows quick transfers, and lacks stringent age and identity verification procedures.

“When it comes to sex trafficking in the U.S., by far the most commonly referenced platform is CashApp… It is probably the most frequently referenced financial brand in the hotline data,” said Sara Crowe, senior director at Polaris.

Cash App says it doesn’t allow payments for sex trafficking and other crimes, but the Department of Justice frequently announces indictments and sentencings in cases where Cash App was used in the commercial sexual exploitation of minors.

According to a report published by the Stanford Internet Observatory, underage users commonly share their $Cashtags on their social media accounts. Predators then use Cash App to pay minors for sexually explicit content. On TikTok, minors share their $Cashtags during livestreams of themselves undressing or engaging in other sexually explicit acts.

Discord

Discord, an increasingly popular messaging and social platform, is not only a place for regular users to “Talk and Hang Out” but also a place for grooming and exploitation.

Pedophiles use the app to groom children and to obtain and share CSAM. It has also become a magnet for deepfakes and image-based sexual abuse.

While Discord does have parental controls and some safety policies in place, NCOSE’s research found that those safeguards aren’t doing their job. For example, sexually explicit content is supposed to be blurred by default, and minors are supposed to see warnings when they receive messages from adults they aren’t connected with. However, NCOSE’s team found that the blurring didn’t work, and they saw no warnings. Minors can still see pornography on servers and in private messages.

Discord fails to flag grooming behaviors. It also does not monitor livestream video or audio calls for CSAM or exploitation. The consequences of this lack of oversight are evident.

In a 2024 report by the Protect Children Project, one in three CSAM offenders named Discord as a top platform for searching for, viewing, and sharing CSAM. Discord was also the third most-mentioned platform where CSAM offenders tried to contact kids.

Countless news reports show how predators groom, exploit, and sextort children through Discord. In February 2024, a 14-year-old Washington girl went missing for over three weeks and was eventually found with a man who had groomed her on Discord. He now faces charges of criminal sexual conduct.

NCOSE recommends that Discord ban minors entirely until it cleans up the platform and can ensure it is safe for kids.

GitHub

Microsoft’s GitHub bills itself as “the world’s leading AI-powered developer platform,” but it is also one of the biggest contributors to image-based sexual abuse technology and AI-generated CSAM.

GitHub currently hosts code and forums dedicated to creating deepfakes and “nudify” apps. These apps take images of clothed women and “strip” them to generate new nude photos. GitHub also hosts code for creating AI-generated CSAM.

In December 2023, the Stanford Internet Observatory found over 3,200 images of suspected CSAM in LAION-5B, the dataset used to train Stable Diffusion, a popular generative AI model. This dataset was available on GitHub.

On GitHub, users can share sexually exploitative code with one another, which significantly advances the technology people use to commit image-based sexual abuse. GitHub hosts three of the best-known tools used to create synthetic sexually explicit material (SSEM).

GitHub has implemented automated detection to report CSAM. However, it doesn’t scan for code that generates or distributes CSAM. Consequently, programmers can continue to develop and advance this technology on GitHub without facing any repercussions.

According to NCOSE, GitHub does report CSAM, but 90% of its filed reports lack enough detail to be actionable.

NCOSE is urging GitHub to moderate its platform, implement basic safety standards, and ban code that fuels sexual exploitation.

For the complete 2024 “Dirty Dozen” list, visit NCOSE’s official website.

NCOSE has been compiling the “Dirty Dozen” list since 2013. Click below if you’re interested in seeing our write-up of previous years’ lists: