That’s the number of reports of child sexual abuse images online that the UK charity Internet Watch Foundation (IWF) received in 2019.

It also happens to be a 14% increase on the number of reports made in 2018. In other words, as Andy Burrows, head of Child Safety Online at the National Society for the Prevention of Cruelty to Children, puts it, this “disturbing increase” shows clearly that child sexual exploitation imagery, also known as “child pornography,” isn’t just a problem, but a “growing” problem.

Of all the reports made to the IWF in 2019, 132,000 showed images or videos of children being sexually abused. Moreover, while each report contained at least one such image, some reports contained thousands of child sexual abuse images. The IWF says this means that all of the reports it receives “equates to millions of images and videos.”

In response to the IWF’s data, Burrows called out the tech industry, saying, “We must see the emphasis broaden[ed] from a focus on taking down illegal material to one where social networks and gaming sites are doing much more to disrupt abuse in the first place.”

Thankfully, it seems like one of the tech industry’s most prominent players has taken note of the calls for action. Let’s get the facts.

Apple automatically scans iPhone photos for child sexual exploitation imagery

At the CES 2020 tech show in Las Vegas, Apple’s chief privacy officer, Jane Horvath, revealed that the company was automatically screening images that are backed up to its storage service. According to Horvath, such technologies are being utilized “to help screen for child sexual abuse material.”

A disclaimer on Apple’s website further backs Horvath’s statements:

“Apple is dedicated to protecting children throughout our ecosystem wherever our products are used, and we continue to support innovation in this space. As part of this commitment, Apple uses image-matching technology to help find and report child exploitation.”

Talk about a game-changer in the fight against child abuse images.

So, how does this fancy technology check our phone pictures? Well, we’ll get there, but before we get into the details, we first need to understand the privacy technology that Apple uses to store photos in the first place.

How Apple’s privacy technology works

Apple’s privacy technology is known as end-to-end encryption, and it works with all data uploaded to iCloud. This technology ensures that only you and the person you’re communicating with (if you are sending a picture to someone) can see what’s been uploaded and sent.

To put it another way, end-to-end encryption permits you to take a selfie of your family skiing, store it on your phone as you continue to hit the slopes, and send it to grandma when you get home without anyone else in the world having the ability to see it (assuming nobody knows your iPhone password or magically has the same fingerprint as you).

But that same technology would also permit someone to share a child abuse image while they and their messaging threads stay hidden from the rest of the world, basically giving them free rein to record the abuse of children or save images of child abuse, distribute the material to others, and not get caught.

This is clearly what makes Apple’s photo scanning technology so important. However, it has also caused some to worry that its use could be an invasion of privacy.

For example, is Apple simply breaking into our phones to scan all of our photos in the name of saving children?

How Apple’s scanning technology works

According to Paul Bischoff, a privacy advocate for Comparitech.com, the answer to that question is almost certainly no.

Bischoff thinks that Apple utilizes law enforcement’s database of child abuse photos to do it:

“Apple hashes or encrypts those photos with each users’ security key (password) to create unique signatures. If the signatures of any encrypted photos uploaded from an iPhone match the signatures from the database, then the photo is flagged and presumably reported to authorities.”

Essentially, what this means is that Apple’s screening technology only flags an uploaded photo if the photo matches a child abuse photo in law enforcement’s database. Apple isn’t “simply decrypting a user’s phone or iCloud at the behest of law enforcement, or giving law enforcement a backdoor to decrypt user files.”

This technology, Bischoff continues, “…is more targeted and doesn’t allow for much third-party abuse. It also targets child pornography…”
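
To make the idea of signature matching a little more concrete, here is a minimal sketch in Python. It is not Apple’s implementation, which has not been published in this detail; it only illustrates the general approach Bischoff describes, where an upload is flagged only if its signature already appears in a database of known signatures. The function names (`signature`, `build_database`, `is_flagged`) and the placeholder byte strings are our own assumptions for illustration, and we use a plain SHA-256 hash and leave out the per-user key Bischoff mentions.

```python
import hashlib


def signature(data: bytes) -> str:
    """Return a fixed-length signature (SHA-256 hex digest) for some file bytes."""
    return hashlib.sha256(data).hexdigest()


def build_database(known_files: list[bytes]) -> set[str]:
    """Build a set of signatures from files already known to the database.

    Hypothetical helper: in the scenario described above, the signatures
    would come from a law enforcement database, not from local files.
    """
    return {signature(data) for data in known_files}


def is_flagged(upload: bytes, database: set[str]) -> bool:
    """Flag an upload only if its signature matches a known signature."""
    return signature(upload) in database


# Placeholder byte strings stand in for file contents (assumption for illustration).
known = build_database([b"placeholder-known-file-1", b"placeholder-known-file-2"])

print(is_flagged(b"placeholder-known-file-1", known))  # matches a known signature
print(is_flagged(b"family-ski-selfie", known))         # no match, so not flagged
```

Notice that the check never needs to “look at” what a photo shows. It only compares signatures, which is why a photo that is not already in the database is not flagged. One limitation of this simplified version is that a plain cryptographic hash only matches files that are byte-for-byte identical, so it should be read as a sketch of the matching step rather than a full image-matching system.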

Thankfully, Apple is not alone in using technology to fight child exploitation.

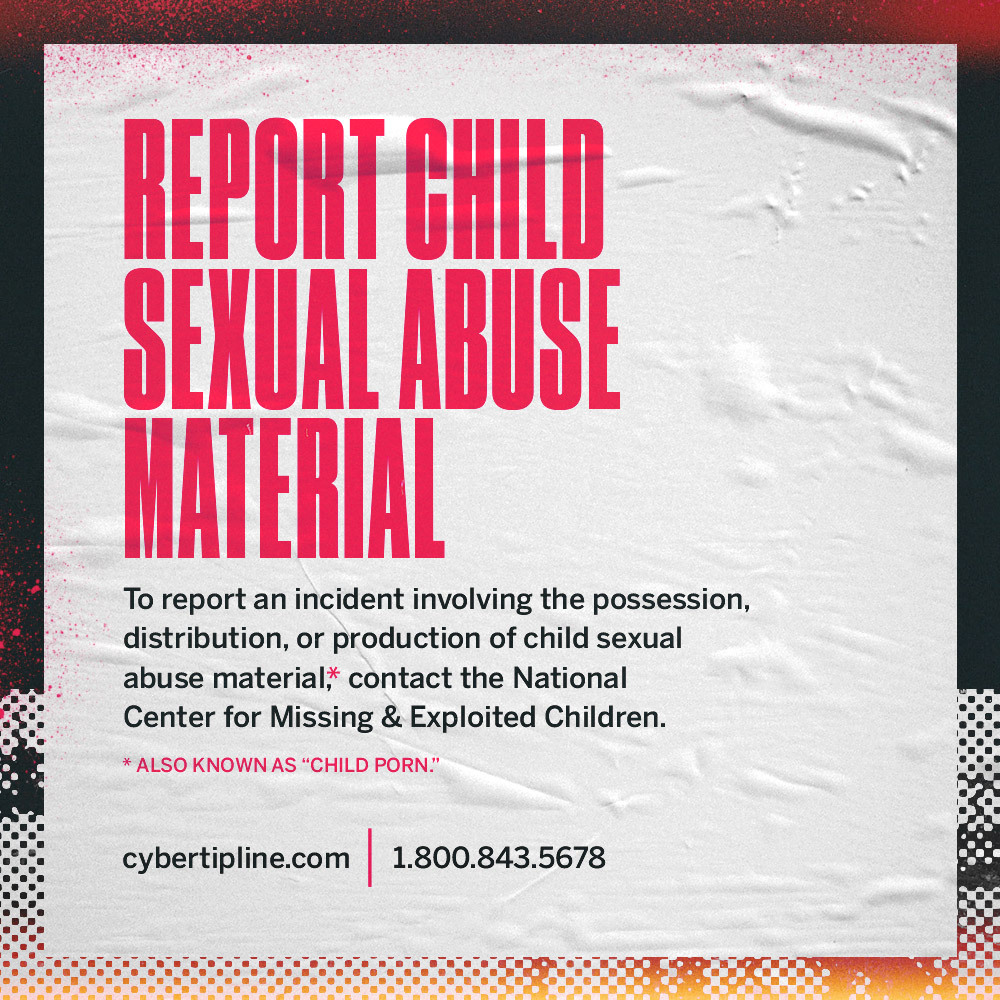

How to report child sexual abuse material if you or someone you know sees it

WhatsApp, the ultra-popular cross-platform messaging and calling app owned by Facebook, also uses its most “advanced technology, including artificial intelligence, to scan profile photos and images in reported content, and actively ban accounts suspected of sharing this vile content.”

And while WhatsApp has stated that “technology companies must work together to stop [the spread of CSAM],” the fight against child exploitation can’t end with tech companies alone.

If you see something, we encourage you to say something. When you report child porn, you make it possible for a child or children to be saved, and for the trafficker(s) who exploit minors to be held accountable.

In terms of how exactly to report child exploitation, check out this previous post of ours.

To report an incident involving the possession, distribution, receipt, or production of child sexual abuse material, file a report on the National Center for Missing & Exploited Children (NCMEC)’s website at www.cybertipline.com, or call 1-800-843-5678.

Your Support Matters Now More Than Ever

Most kids today are exposed to porn by the age of 12. By the time they’re teenagers, 75% of boys and 70% of girls have already viewed it, often before they’ve had a single healthy conversation about it [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense].

Even more concerning: over half of boys and nearly 40% of girls believe porn is a realistic depiction of sex [Martellozzo, E., Monaghan, A., Adler, J. R., Davidson, J., Leyva, R., & Horvath, M. A. H. (2016). “I wasn’t sure it was normal to watch it”: A quantitative and qualitative examination of the impact of online pornography on the values, attitudes, beliefs and behaviours of children and young people. Middlesex University, NSPCC, & Office of the Children’s Commissioner]. And among teens who have seen porn, more than 79% use it to learn how to have sex [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense]. That means millions of young people are getting sex ed from violent, degrading content, which becomes their baseline understanding of intimacy. Out of the most popular porn, 33%-88% of videos contain physical aggression and nonconsensual violence-related themes [Fritz, N., Malic, V., Paul, B., & Zhou, Y. (2020). A descriptive analysis of the types, targets, and relative frequency of aggression in mainstream pornography. Archives of Sexual Behavior, 49(8), 3041-3053. doi:10.1007/s10508-020-01773-0; Bridges et al., 2010, “Aggression and Sexual Behavior in Best-Selling Pornography Videos: A Content Analysis,” Violence Against Women].

From increasing rates of loneliness, depression, and self-doubt, to distorted views of sex, reduced relationship satisfaction, and riskier sexual behavior among teens, porn is impacting individuals, relationships, and society worldwide [Fight the New Drug. (2024, May). Get the Facts (Series of web articles). Fight the New Drug].

This is why Fight the New Drug exists—but we can’t do it without you.

Your donation directly fuels the creation of new educational resources, including our awareness-raising videos, podcasts, research-driven articles, engaging school presentations, and digital tools that reach youth where they are: online and in school. It equips individuals, parents, educators, and youth with trustworthy resources to start the conversation.

Will you join us? We’re grateful for whatever you can give, but a recurring donation makes the biggest difference. Every dollar directly supports our vital work, and every individual we reach decreases sexual exploitation. Let’s fight for real love.