Digital sexual exploitation just got massively more accessible.

In October of 2020, visual threat intelligence company Sensity published its “Automating Image Abuse” report on a troubling issue: deepfake bots on the encrypted messaging platform Telegram. Not just any bots—these were designed with software meant to take images of women and “strip” them naked within 60 seconds, practically for free.

Sensity (previously called Deeptrace) is a deepfakes-monitoring company, and it is one of the leading companies publishing on the state of affairs of “deepfakes,” the term for manipulated video, audio, or photo content, generally through the use of Artificial Intelligence (AI). It published a report in September 2019, laying out the “landscape” of deepfakes and their content, threats, and impact.

Counter to what many in our culture may claim, Sensity’s analysis concluded that the biggest threat deepfakes pose isn’t political. Instead, it is exploitation, specifically of women, whose bodies or faces are used to make deepfake porn. In fact, the analysis revealed that 96% of deepfake videos on the internet were nonconsensual porn.

A new report, but the same news

Unfortunately, we can’t say the results from this new report relay anything better: if anything, they seem to serve as a confirmation of the last one.

Sensity found seven Telegram channels operating a bot built on the software behind “DeepNude,” a now shut-down website that used AI to create realistic-looking images of naked women. The creators of DeepNude closed shop after a huge inflow of demand, stating that “the world is not yet ready for DeepNude.” (Will it ever be “ready”?) Its technology, however, was sold anonymously and has been found “in enhanced forms” on sketchier websites. This leads us to the latest nonconsensual nudes scam.

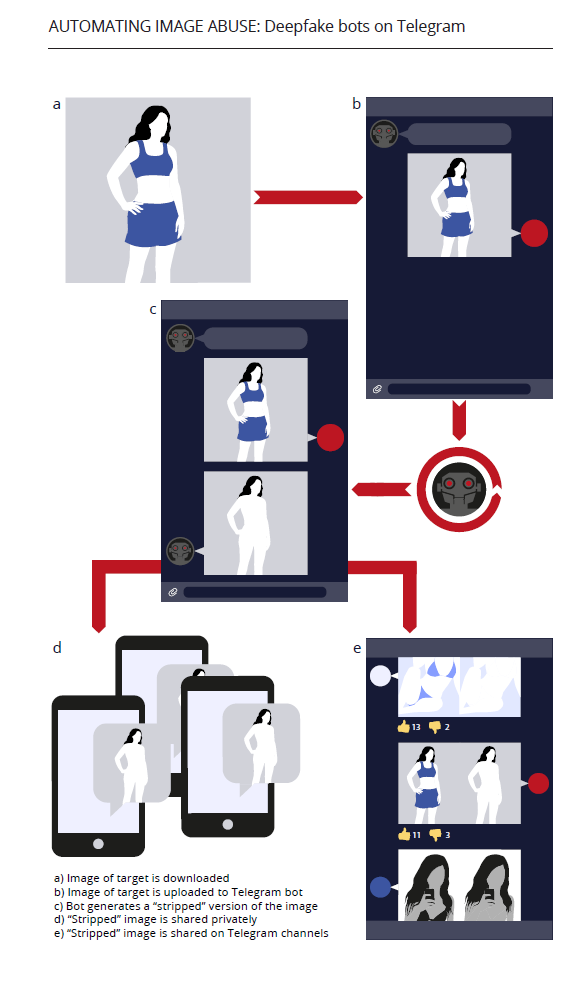

The Telegram scheme works like this: one bot, seven channels, and 103,585 members between them, at least 45,615 of them unique. Between these thousands of members, around 104,852 women were found to have been targeted, meaning AI-“stripped” images of them had been shared publicly by July 2020. This is especially concerning given that the “nudes”-creating bot was first launched only in July 2019.

Three months later, that number had grown to 680,000 targeted women. All users had to do was upload an image of a woman to the bot (the only type of image it could successfully manipulate), and shortly after, it would produce a downloadable, “stripped” image with a watermark that could be removed using “premium coins” users could purchase for as little as $1.50.

This is Sensity’s depiction of how these images are manipulated and shared:

The most troubling part? Seventy percent of the women were private individuals (non-celebrities) whose images were taken from social media or private communication, likely without their consent. They included “a limited number…who appeared to be underage, suggesting that some users were primarily using the bot to generate and share pedophilic content.”

What do these concerning stats really reveal?

There are a few things this Sensity report highlights:

It’s easier than ever to create digital sexual exploitation…of anyone

First, the ease with which sexual exploitation can be made and sold today, often without the victim’s knowledge, is very concerning. The technology for this malicious manipulation has become cheaper, faster, and easier to find and use. In fact, Sensity’s founder stated, “Having a social media account with public photos is enough for anyone to become a target.”

The activity from this Telegram scheme underscores this point of a widening target base, marking a departure from what Sensity’s last report found: that celebrities or stars were the main targets of deepfake porn. The Telegram report finds that while only 16% of the bot’s users were interested in undressing celebrities or stars, 63% responded to a poll stating that their intent was to “target private individuals,” girls whom the user knows in real life.

How porn ushers in an attitude of “sexual entitlement”

The second thing Sensity’s report hints at is an underlying trend of a “sexual entitlement” mentality, where consumers believe they’re entitled to view and objectify any person without their consent, regardless of whether that person is underage.

The consequences of this are significant: it raises red flags about our culture’s understanding of consent, which studies show is increasingly an issue when it comes to sexual ethics among younger generations. This also shows, on a new level, how porn is used to normalize objectifying and commoditizing individuals.

Content not just for consumption, but extortion and humiliation

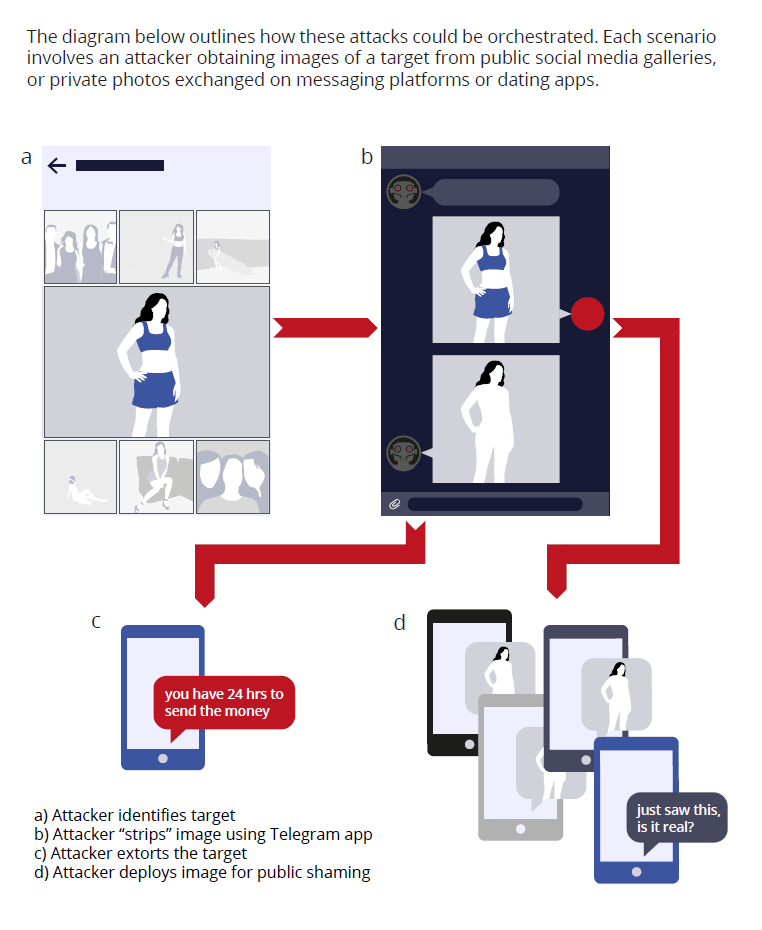

As if that weren’t concerning enough, the report found that deepfake content of these individuals is used as a tool for public humiliation, or even extortion. And this is happening on more than just Telegram. Videos from TikTok, a platform notorious for its use by underage users, have been found manipulated and uploaded to mainstream porn sites. Thousands of deepfake videos have made their way onto mainstream porn sites, as well.

These deepfake porn creators can also threaten to share the content publicly, or with a victim’s friends or family, unless the victim pays them. This wouldn’t be acceptable even if the content were real, but in these cases, the content used for extortion is fake.

Deepfake porn is uniquely harmful compared with political or entertainment-based deepfakes because explicit deepfake content can seriously harm the victim even if it’s poor quality. And all of this is usually done without the victim knowing they’re being targeted.

Here’s another visual representation of how the Telegram bots operate:

A scary new reality

The disturbing reality this creates is one where any woman, or person of any gender for that matter, with a social media account is vulnerable to having her face and body sexually exploited without her knowledge.

Her image can be shared with her coworkers, friends, or family, or posted on sketchy sites or mainstream porn websites. It can be taken and exploited for the sexual gratification of anyone, and then used against her to extort money or other forms of payment.

As of now, there is little legal protection against these sorts of attacks, and oftentimes the damage women suffer, in humiliation, in reputation, and even in the workplace, is serious.

Even so, there are steps you can take to defend yourself. If you or someone you know has become a victim of deepfakes, here’s what you can do.