Trigger warning: descriptions of abuse and sex-related topics

According to deepfake detection company Sensity, this represents the number of deepfakes found on the web as of June 2020—many of which were explicit.

Deepfakes are fake videos or audio recordings made to look and sound like the real thing. A deepfake might be as simple as someone editing LeBron James into a video of their pickup basketball game with friends. That is still a problem, because it uses someone’s name and likeness without their permission, but the issue becomes far clearer when the video is pornographic.

Pornographic deepfakes of celebrities, musicians, and actresses are now more common than deepfake videos that aren’t pornographic.

WIRED, an American magazine exploring the impact of emerging technologies on culture, economics, and politics, recently reported that these explicit videos flood major porn sites monthly. These videos rack up millions of views, and the porn sites that host these videos fail to remove them from their sites.

Wondering how this could be? Let’s get into the details.

Viewers are watching deepfake videos featuring Taylor Swift, Natalie Portman, and other celebrities.

“Even if it’s labeled as, ‘Oh, this is not actually her,’ it’s hard to think about that I’m being exploited,” Hollywood actress Kristen Bell told Vox in June regarding finding out that deepfakes were being made using her image.

Other deepfake videos, which have hundreds of thousands or millions of views, feature A-list celebrities such as Natalie Portman, Billie Eilish, Taylor Swift, Anushka Shetty, and Emma Watson. Watson’s video, a 30-second clip featuring her face in a pornographic video, received over 23 million views. Many of these celebrities have been frequent deepfake targets since the trend emerged in 2018.

The fact that the above names are all women is not a coincidence, either. Sensity’s report from last year revealed that 96% of the online deepfake videos in July 2019 were pornographic, predominantly focusing on women.

What are porn sites doing about this?

The quick answer? Not much.

Sensity’s 2019 analysis shows that the top four deepfake porn websites received more than 134 million views last year. Some videos were even requested; their creators can be paid in bitcoin.

The number of released videos and views per video continues to rise. People upload up to 1,000 deepfake videos to porn sites every month. Despite this increase, and with the total number of videos as of June 2020 being three times that of July 2019, porn sites are inadequately addressing the removal of illegal content.

The money-making potential of deepfakes, thanks to ad space on porn sites, is considerable. The sites’ inaction is unsurprising, especially given their substantial visitor numbers.

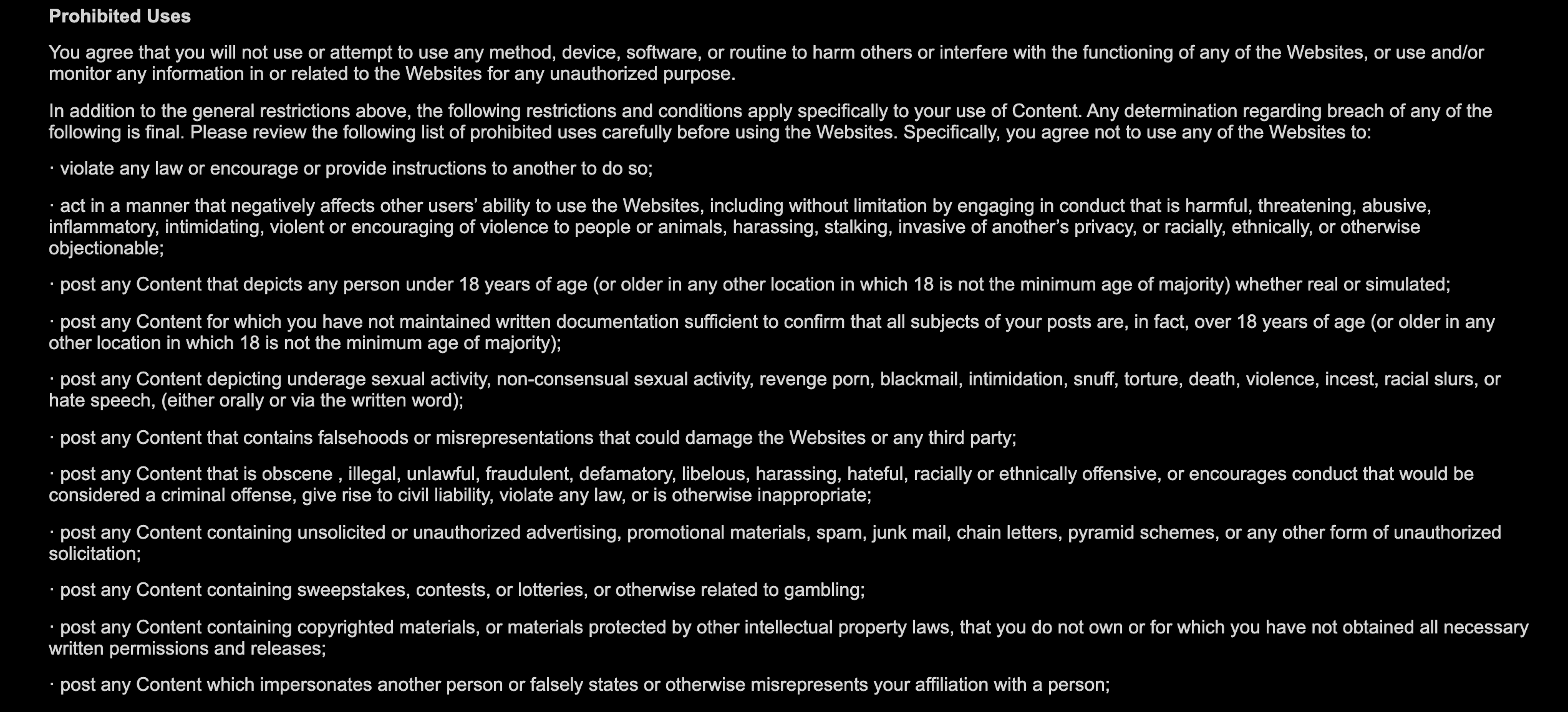

Note Pornhub’s “Prohibited Uses” information on their Terms of Service page in this screenshot taken in late September 2020:

Deepfake porn violates a number of these terms, and yet there are reportedly numerous deepfake porn videos on Pornhub. The search bar reportedly auto-fills “deepfakesporn” when a user types in just the first few letters of that word.

XVideos and Xnxx, porn sites that also host numerous deepfake videos, are two of the top three porn sites and two of the top ten websites overall in the world, with as many visitors as, or more than, Wikipedia, Amazon, and Reddit.

When asked about the increasing deepfake problem, Alex Hawkins, the VP of popular porn site xHamster, said that, while the company doesn’t have a specific policy for deepfakes, it treats them “like any other nonconsensual content.” More specifically, Hawkins said that the company removes videos if people’s images are used without permission:

“We absolutely understand the concern around deepfakes, so we make it easy for it to be removed. Content uploaded without necessary permission being obtained is in violation of our Terms of Use and will be removed once identified.”

Regardless, xHamster seemed to need WIRED’s help identifying dozens of apparent deepfake videos on the site. And, although such videos are widely considered to target, harm, and humiliate the women at their center, Giorgio Patrini, CEO and Chief Scientist at Sensity, says, “The attitude of these websites is that they don’t really consider this a problem.” Moreover, the number of people who are featured in deepfakes is increasing.

Patrini also added that Sensity has noticed a growing number of deepfakes featuring Instagram, Twitch, and YouTube influencers. This leads him to believe that the public will eventually and inevitably target these influencers for similar videos.

Why this matters

As the underlying AI technology for deepfake videos advances, it becomes cheaper and easier to use. Consequently, the risk of anyone ending up in such a video grows. This risk is magnified by popular porn sites, filled with deepfakes, refusing to take nonconsensual video creation seriously.

Clare McGlynn, a professor at the Durham Law School who specializes in porn regulations and sexual abuse images, agrees. “What this shows is the looming problem is going to come for non-celebrities,” she said. “This is a serious issue for celebrities and others in the public eye. But my longstanding concern, speaking to survivors who are not celebrities, is the risk of what is coming down the line.”

What it comes down to is this: even when porn is supposedly “not” exploitative because it uses AI-generated imagery, it still is.

Although they do not face literal sexual assault, survivors of nonconsensual video creation face many disruptive mental health issues that affect their daily lives. In some cases, the mental health effects of sexual assault and of nonconsensual video creation are strikingly similar for survivors.

When will we condemn the creation of deepfakes, considering the ongoing exploitation?

This is one of the many reasons we refuse to click on porn sites. They profit from the creation and distribution of nonconsensual content.

Will you join us?

Click here to learn what you can do if you’re a victim of revenge porn or deepfake porn.