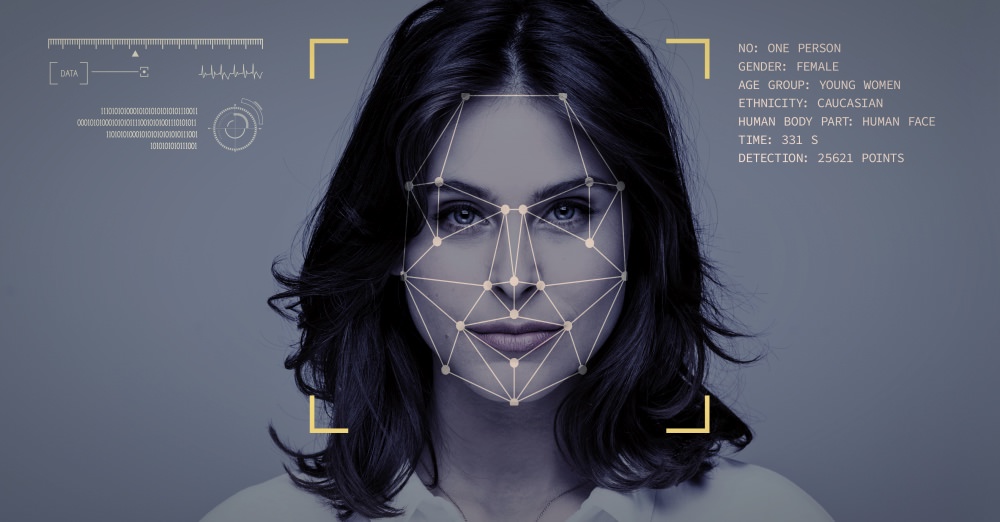

A man who goes by the pseudonym Li Xu claims to have spent six months developing a facial recognition tool that allows men to cross-reference women’s faces in porn videos with their real-life profiles on social media platforms like Facebook and Instagram.

The anonymous, Germany-based programmer recently posted about his new tech on Chinese social network Weibo, resulting in viral Twitter buzz and public uproar.

The goal? To expose women and help men “check if their girlfriends have ever been in porn.”

Xu claims the tech, which collected over 100 terabytes of data, was intended to help men identify “promiscuous” women who have been in sex videos, and that over 100,000 women who worked in the porn industry were “successfully identified on a global scale.”

Tech allegedly shut down after public backlash

While there’s no verifiable proof that this tech actually existed (Xu never published his code or databases, just an empty GitLab page), he did promise to share the technical details in a live Q&A scheduled for June of this year, which was later canceled.

Due to a wave of public criticism, the programmer has since removed his Weibo posts and allegedly deleted the project and all its data. Xu also claims that after he developed the tech, he realized it could also have been used by women to check if sex videos of them had been uploaded without their consent.

Xu told news outlet Sohu, “I should not have announced the news in a rush. I should have made a more comprehensive plan, explained what the team wanted to do, and tried to win the support of relevant authorities.”

When asked if he was aware of any legal issues with the project, Xu responded that it shouldn’t have been a problem since he didn’t intend to publicly release the private data, and because sex work is legal where he lives in Germany.

Still, the programmer has been accused of “slut-shaming” and violating privacy laws by several prominent academics who discuss the potential implications of this technology.

Under the European Union’s General Data Protection Regulation (GDPR), the purposes for which personal data is collected need to be legitimate, and collecting sensitive personal data such as biometric facial data is generally illegal if individuals don’t consent.

Marcelo Thompson, an assistant professor specializing in privacy at the University of Hong Kong, said the case was “a clear violation” of data protection laws in Europe and China.

According to Thompson, “Victimization of women because of their previous sexual lives strikes me as one of the most illegitimate purposes I can think of.”

While there is no comprehensive federal privacy law in the United States, California does have privacy legislation that would have made this type of data collection illegal. It would also be illegal for someone elsewhere to set up this database using California data, which would be difficult to avoid given that the porn industry is predominantly based in Los Angeles.

Still, enforcement of these laws can be tricky, and the emergence of this tech would leave people vulnerable in many other countries.

Where this porn-influenced tech could lead

Whether this programmer’s claims are factual, and whether his tech has really been shut down, is beside the point. The issue is much larger.

Many experts in machine learning and gender equality studies have described this project as algorithmically targeted harassment, including author Soraya Chemaly, who tweeted:

“This is horrendous and a pitch-perfect example of how these systems, globally, enable male dominance. Surveillance, impersonation, extortion, misinformation all happen to women first and then move to the public sphere, where, once men are affected, it starts to get attention.”

Author and attorney Carrie A. Goldberg, who specializes in sexual privacy violations, says this tech could put many people in real danger.

“Some of my most viciously harassed clients have been people who did porn, oftentimes one time in their life and sometimes nonconsensually [because] they were duped into it. Their lives have been ruined because there’s this whole culture of incels that for a hobby expose women who’ve done porn and post about them online and dox them.”

And Feng Yuan, co-founder of Equality, a Beijing-based nonprofit that focuses on women’s rights and gender, says the project is discriminatory.

“It is clearly a double standard. Why wasn’t the program aimed at identifying men as well? It’s not as simple as the fact that some programmers discriminate against women. The double standard for gender exists widely in Chinese society.”

Building databases of faces that can be used to target and expose women is well within reach at the consumer level. In fact, in 2017 Pornhub announced new facial recognition features that “make it easier for users to find their favorite stars.” And in 2018, online trolls began compiling databases of porn performers in an attempt to threaten and expose them.

Artificial intelligence inside and outside the porn industry

The fact that this type of tech could or does exist is frightening and is just one of several recent incidents that are sparking conversations about the ethical and legally responsible use of artificial intelligence.

Just one example is how easy it is to create deepfakes—or videos created using AI to insert faces of regular people onto the bodies of porn performers. The overarching theme with porn-influenced AI today is controlling and extorting women’s bodies for sexual pleasure.

Danielle Citron, a professor of law at the University of Maryland who studies the aftermath of deepfakes, also tweeted about Xu’s new tech.

Consider the dark paths where porn-fueled tech can lead—even beyond just snooping into a partner’s past. This is yet another example of the negative impact porn has on society, individuals, and relationships.

Porn significantly contributes to a culture where privacy lines are at the very least blurred, if not non-existent. Exploitation, victim-blaming, and even shaming performers—many of whom are manipulated, coerced, or forced against their will to perform in porn or do extreme sex acts—have become the norm.

When will the negative consequences be enough for society at large to understand that porn and the attitudes and behaviors it normalizes are harmful?

The simple fact is, it starts with the individual—and it can start with you.

Your Support Matters Now More Than Ever

Most kids today are exposed to porn by the age of 12. By the time they’re teenagers, 75% of boys and 70% of girls have already viewed it [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.], often before they’ve had a single healthy conversation about it.

Even more concerning: over half of boys and nearly 40% of girls believe porn is a realistic depiction of sex [Martellozzo, E., Monaghan, A., Adler, J. R., Davidson, J., Leyva, R., & Horvath, M. A. H. (2016). “I wasn’t sure it was normal to watch it”: A quantitative and qualitative examination of the impact of online pornography on the values, attitudes, beliefs and behaviours of children and young people. Middlesex University, NSPCC, & Office of the Children’s Commissioner.]. And among teens who have seen porn, more than 79% use it to learn how to have sex [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.]. That means millions of young people are getting sex ed from violent, degrading content, which becomes their baseline understanding of intimacy. Out of the most popular porn, 33%-88% of videos contain physical aggression and nonconsensual violence-related themes [Fritz, N., Malic, V., Paul, B., & Zhou, Y. (2020). A descriptive analysis of the types, targets, and relative frequency of aggression in mainstream pornography. Archives of Sexual Behavior, 49(8), 3041-3053. doi:10.1007/s10508-020-01773-0; Bridges et al., 2010, “Aggression and Sexual Behavior in Best-Selling Pornography Videos: A Content Analysis,” Violence Against Women.].

From increasing rates of loneliness, depression, and self-doubt, to distorted views of sex, reduced relationship satisfaction, and riskier sexual behavior among teens, porn is impacting individuals, relationships, and society worldwide [Fight the New Drug. (2024, May). Get the Facts (Series of web articles). Fight the New Drug.].

This is why Fight the New Drug exists—but we can’t do it without you.

Your donation directly fuels the creation of new educational resources, including our awareness-raising videos, podcasts, research-driven articles, engaging school presentations, and digital tools that reach youth where they are: online and in school. It equips individuals, parents, educators, and youth with trustworthy resources to start the conversation.

Will you join us? We’re grateful for whatever you can give, but a recurring donation makes the biggest difference. Every dollar directly supports our vital work, and every individual we reach decreases sexual exploitation. Let’s fight for real love.