You know how you can face-swap with just about anything using filters from Snapchat or Instagram? And it usually results in something hilarious?

Now, imagine that face-swapping concept, but with the most graphic porn available and regular people like you or your friends. Not so funny, right?

Introducing “deepfakes”

It’s known as “deepfakes” or “deepfake porn.” This growing trend creates fake pornography that goes viral online, victimizing celebrities and innocent social media users alike. Victims have included law student Noelle Martin, engineering students Julia and Taylor, high school student Francesca, Twitch star QTCinderella, and international pop star Taylor Swift.

In the darker corners of the World Wide Web, deepfake creators are taking orders and selling custom-made clips like it’s the next big thing. As the technology continues to improve, demand keeps rising and the product keeps getting more convincing.

What is “deepfake” porn?

Deepfake pornography exploits user-friendly AI technology to graft the faces of non-consenting people onto explicit material, generating fake pornographic images, videos, and GIFs. The result is convincing content depicting intimate, explicit acts, created without the permission of the person portrayed.

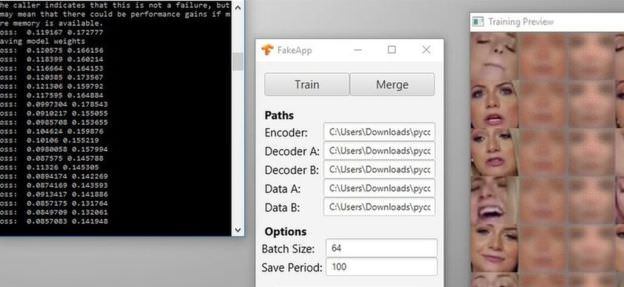

The term was coined by a Reddit user called “deepfakes,” who started posting pornographic videos that used this face-swapping technology to feature celebrities such as Gal Gadot, Scarlett Johansson, and Aubrey Plaza. A Windows program called FakeApp then made it easy for anyone to create such videos, provided they had enough photos of their subject, which are easy to come by for celebrities.

This type of pornography is closely related to revenge porn and hacked celebrity photos, which can be weaponized to humiliate a person and ruin their reputation. Revenge porn and hacked photos differ from deepfakes because the original material doesn’t need to be altered, but the ethical issues of consent and the objectification of victims are similar.

Wait, what does this mean?

Deepfake porn has usually featured actresses and other celebrities. But because accessible software lets anyone stitch footage together, the potential pool of victims now extends to anyone with a social media or online presence, which covers much of the world. It’s probably time to tighten those privacy settings.

To add insult to a severe invasion of privacy, people who upload this nonconsensual content have often faced no legal consequences, since it isn’t made from stolen intimate photos. As one reporter in Wired wrote, “You can’t sue someone for exposing the intimate details of your life when it’s not your life they’re exposing.”

As this technology becomes more common, the authenticity of all kinds of videos, not just explicit ones, will constantly be in question. Video evidence could become inadmissible in court, while fake pornographic material continues to exploit unwitting celebrities and social media users. By dangerously blurring the line between reality and imitation, this trend puts anyone at risk of being victimized, from celebrities to you.

So, can we fight this technological tyrant? And if so, how?

How do we fight back?

Technological advances can be used for both good and ill. Related digital face-replacement techniques recreated a young Princess Leia at the end of Rogue One and brought Paul Walker back to the big screen in Furious 7, and deepfake software has been used to plaster Nicolas Cage’s face all over iconic movie scenes, which, let’s face it, is meme gold.

But there is nothing funny or acceptable about ruining someone’s image for sexual entertainment.

While major porn sites like Pornhub have promised to ban deepfake porn, their users still manage to upload and spread these videos and images at an alarming rate. Other sites where deepfake porn has become popular are taking a more aggressive approach. The GIF site Gfycat, for example, says it has figured out a way to train artificial intelligence to spot fraudulent videos. With this developing technology, Gfycat, which has at least 200 million daily active users, hopes to take a more comprehensive approach to kicking deepfakes off its site for good.

Websites like Reddit and Twitter rely on users to report deepfake content on their platforms. The system isn’t highly developed, but it at least shows that these platforms are taking a stand against this unacceptable content.

We fight against porn and sexual exploitation because we don’t believe anyone’s violation should be sexual entertainment. Join us, and choose to create a culture that stops the demand for nonconsensual deepfakes, pornography, and sexual exploitation altogether.

Get Involved

Expose the porn industry for what it is and speak out against this harmful trend. SHARE this article to spread the word on the harms of porn and add your voice to the conversation.

Spark Conversations

This movement is all about changing the conversation about pornography and stopping the demand for sexual exploitation. Repping a tee sparks vital conversations about porn’s harms, inspiring lasting change in lives and in our world. Are you in? Check out all our styles in our online store.