Child exploitation images, also commonly known as child porn, are something you might be hearing more about these days in the news, in conversations, or from celebrity platforms. The idea of sexual exploitation imagery of children being traded like baseball cards and used as currency in some child abuser circles is horrifying, but how exactly does all of that happen if child porn is illegal?

In other words, can you stumble upon child porn just by typing the term into a search bar, or is it more complex than that?

The short answer is both. The longer answer is that there have been many reports of child sexual abuse material (CSAM) being freely posted and shared on social media, while less accessible ways of sharing it have also become more widely used. As porn moved from print onto the digital stage, the volume, speed, and diffusion of pornography, and of CSAM specifically, increased tremendously. This growth has been fueled by chat rooms, websites, and, in recent years, peer-to-peer (P2P) file-sharing networks.


Between 2000 and 2009, the number of child exploitation imagery offenses involving P2P networks rose 57%, based on cases that ended in conviction. In the last couple of years, people have come to prefer storage platforms like iCloud over P2P file sharing, but we still have a problem, especially because relatively little research has been done on the subject.

What we do know, though, is worth looking into.

What exactly is P2P and how does it work?

Let’s back up for a minute. What exactly is a peer-to-peer network and what does it have to do with CSAM?

P2P networks, or P2Ps as they’re often called, are file-sharing platforms that let large files such as music, books, shows, and movies be shared quickly and relatively anonymously. They include programs like Ares, Gnutella, eDonkey, and BitTorrent.

Once you download a P2P program, you can request a file from millions of other users around the world, and pieces of that file are downloaded onto your computer from many different “peers” who already have it. Splitting the download up this way makes access faster and more anonymous.

P2Ps also let a lot of more questionable material slip through, like copyrighted content or CSAM. They are especially important to the underground CSAM trade because they are international, completely public, and give easy access to child porn. But how?


They avoid central servers that could “supervise” activity; they split a potentially illegal download among many users, making it hard to trace the original source of the material; and they don’t require handing over personal information like a credit card number because, conveniently, they’re completely free. [1]

P2P networks, by the way, account for 10% of internet traffic. If you join one, you’re almost guaranteed to find CSAM if you look for it, and even if you don’t.

Investigators have found that when they searched P2P networks for pornographic content by keyword, 44% of the results involved CSAM. Innocuous-sounding terms are sometimes used to disguise CSAM, but often no disguise is needed at all: child exploitation files can be found simply by searching for an age and a sex act. [2]

What does this imply?

Thankfully, file-sharing programs appear to be on the decline in some regions. Elsewhere, however, file sharing is still prominent and has peaked in the last several years at almost 30% of internet traffic in regions of Asia and Africa.

So what does this mean? It all comes down to the proliferation of CSAM. Every time a download is requested, a duplicate of the illegal image or video is created and added to a shareable pool of copies, allowing the material to keep circulating indefinitely. [3]

What are the numbers?

Several studies have tried to gauge the scale of CSAM on P2Ps, and the findings are telling.

RoundUp is an investigative tool, created in connection with law enforcement, that is used to identify CSAM on P2Ps. Its results show that of the 775,941 computers found with known CSAM files on Gnutella alone, over 30% were based in the United States. [4] Counting duplicate files, close to 130,000 child porn files were shared globally on Gnutella in one year, 36% of the total, and that’s just one P2P network.


This means the content keeps growing as it continues to be shared.

Remember how easy we said it is to access child porn on P2Ps? Many people can even stumble upon it, and anyone looking for it can find it far more easily than elsewhere. Sadly, teenagers are among the biggest users of P2Ps, so it’s no surprise that they could accidentally come across CSAM or gain access they would usually never have.

So, what can be done?

The popularity of P2Ps seems to fluctuate over time. Even so, the limited information we have shows that CSAM has only become more accessible through these networks, as an ever-larger stockpile of exploitation imagery accumulates, ready to be duplicated and shared again and again.

Law enforcement is developing more tools like RoundUp to help identify offenders, but while the structure of P2Ps makes that search easier in some ways, it makes it quite challenging in others. Limitations in the existing studies also make it difficult to grasp the reality of the situation and how much CSAM truly circulates on these networks.


P2Ps can be great for sharing content, but they can cause a lot of harm, too. Illegal content and CSAM are definitely not things we can support, even if we aren’t actively downloading or distributing them ourselves.

CSAM is abuse, and unfortunately, it’s a growing, worldwide problem. Thorn has identified P2Ps as the “main trading platform for this content” and is working to combat it directly on these file-sharing networks. Keep in mind that these networks aren’t the only way CSAM is shared, but they are one of the main ways. As mentioned earlier, social media platforms are another very common way this illicit content is shared, as are mainstream porn sites.

Now that you know how child exploitation imagery is traded and transferred across the internet, you can better avoid it and help raise awareness of how it spreads.

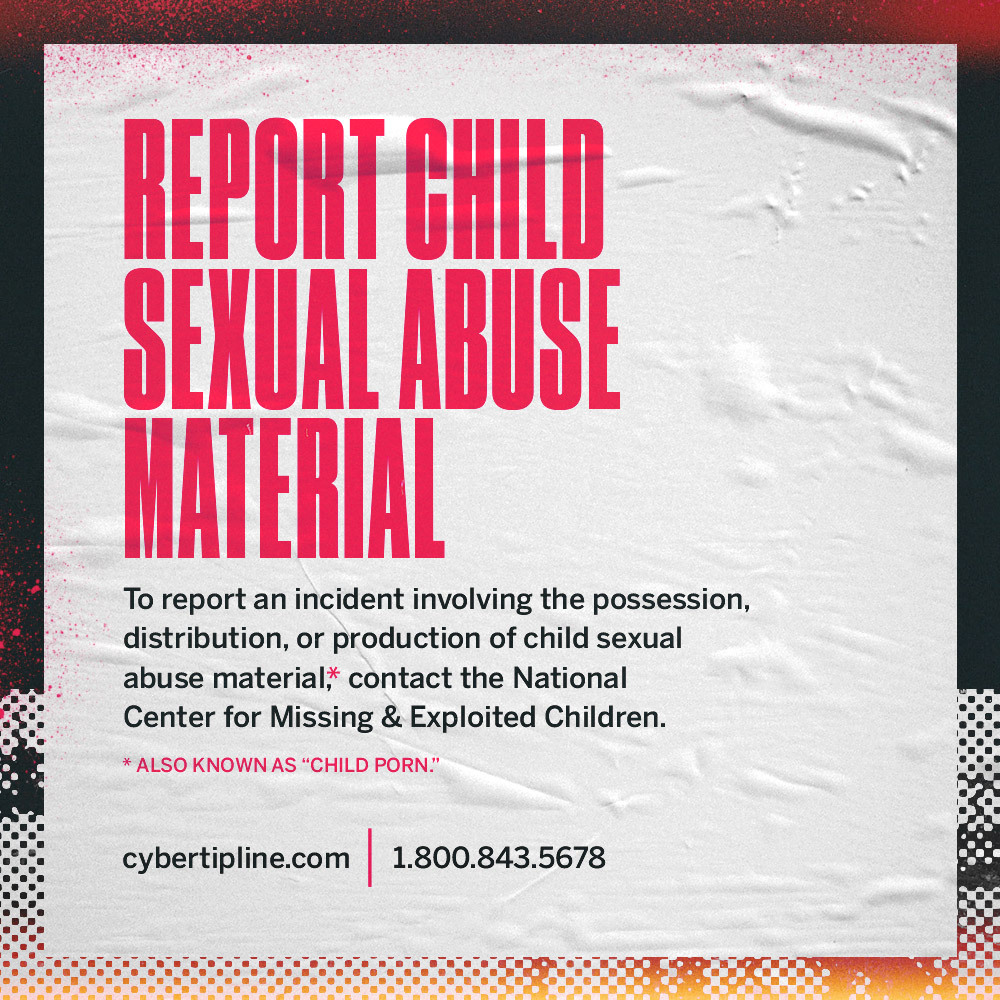

Click here if you or someone you know has stumbled upon CSAM.