Trigger warning: This post contains descriptions of child sexual exploitation. Reader discretion is advised.

In a recent investigation, Forbes discovered many “private” TikTok accounts that may seem harmless at first glance but are portals to some of the most dangerous, disturbing, and exploitative content online—including illegal child sexual exploitation material (CSEM).

The posts are easy to find and look like advertisements, with enticing invitations such as “Don’t be shy, girl.” “Come and join my post in private.” “Let’s have some fun.”

The content is posted to private accounts using a setting that makes it visible only to whoever is logged in. And while TikTok’s security policies prohibit users from sharing their login credentials with others, the app does not require two-step verification (it’s set to “off” by default), so sharing an account is as easy as handing over a password. Forbes discovered that’s exactly what’s happening.

The user simply asks the account owner or another user for the password, and boom—they’re in. From the outside, the account looks innocuous. But on the inside, there are graphic videos of minors stripping naked, masturbating, and engaging in other exploitative acts.

One of the biggest social media platforms in the world

The Forbes report uncovered how easily predators and underage victims of sexual exploitation are meeting and sharing illegal content on one of the biggest social media platforms in the world, a platform that claims to have a “zero tolerance” policy for child sexual abuse material.

In fact, the sheer volume of post-in-private accounts that Forbes identified was shocking, highlighting a major blind spot in TikTok’s moderation and its failure to enforce its own policies. Forbes also found that when accounts are banned, new ones pop up rapidly.

Of course, TikTok isn’t the only social media platform hosting illegal or violent activity. But what’s especially concerning is TikTok’s popularity among young people. More than half of U.S. minors use the app once a day, which makes the pervasiveness of this issue and its apparent targeting of minors especially alarming.

Child sexual exploitation on TikTok

Seara Adair, a child sexual abuse survivor who has built a following on TikTok by drawing attention to the exploitation of kids on the app, was among the first to discover this “posting-in-private” issue.

Someone logged into a private TikTok account, @My.Privvs.R.Open, tagged Adair in a video of a pre-teen “completely naked and doing inappropriate things.” But when Adair used TikTok’s reporting tools to flag the video for “pornography and nudity,” she received an in-app alert saying, “we didn’t find any violations.”

That prompted the first of many viral TikTok videos Adair has posted calling attention to these illicit private accounts. Her efforts have even piqued the interest of state and federal authorities.

Adair shared, “There are quite literally accounts that are full of child abuse and exploitation material on their platform, and it’s slipping through their AI. Not only does it happen on their platform, but quite often it leads to other platforms—where it becomes even more dangerous.”

So how is this “posting in private” phenomenon, or “Only Me” mode as many users know it, so easy to find yet still slipping through the cracks of TikTok’s moderation?

“Post-in-Private”

One reason is that users increasingly rely on code words, known as “algospeak,” to evade detection by content moderation technology.

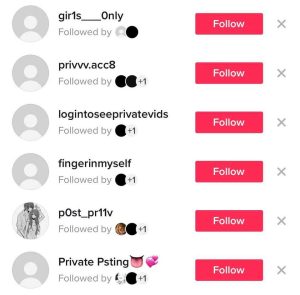

Searching for deliberate typos or slang in phrases, hashtags, and account names returns hundreds of exploitative accounts and invitations to join: “prvt,” “priv,” “postprivt,” and #postinprvts are just a few examples. Some posts also included #viral or #fyp (short for “For You Page”), hashtags tied to popular feeds that attract more than a billion users on TikTok.

Forbes also found that within days of an active TikTok user following a few of these private accounts, the app’s algorithm started recommending dozens more with similar bios like “pos.t.i.n.privs” and “logintoseeprivatevids.” The suggestions popped up frequently in the user’s “For You” feed with a one-click button to follow the accounts.

In the Forbes investigation, users received login information for several post-in-private handles. If there was any vetting before the credentials were handed over, it focused on pledges to contribute images. Multiple accounts were recruiting girls ages “13+” and “14-18.”

One account contained over a dozen concealed videos featuring young girls who appeared to be underage. In one post, a young girl was seen slowly removing her school uniform and underwear until she was naked, despite TikTok’s claim not to allow “content depicting a minor undressing.” Other videos included a young girl masturbating and two young girls taking off their shirts and bras and fondling their breasts.

Girls claiming to be as young as 13 also frequently asked to be let in and participate in these secret groups.

Another concern is users’ bios and comments directing people to move private posting from TikTok to other platforms like Discord and Snap, despite TikTok explicitly forbidding content that “directs users off the platform to obtain or distribute CSAM.”

One user named Lucy, claiming to be 14, had a link to a Discord channel in her TikTok bio along with “PO$TING IN PRVET / Join Priv Discord.” The link led to a Discord channel of a couple of dozen people sharing pornography, primarily of females of various ages. Several of the Discord posts had what appeared to be underage nude girls masturbating or performing oral sex, along with a TikTok watermark, suggesting that the videos had originated on or been shared through TikTok.

TikTok’s “safety policies”

Haley McNamara, director of the International Centre on Sexual Exploitation, told Forbes that in addition to Snap and Discord, similar behavior is present on Instagram through its closed groups and close friends feature.

“There is this trend of either closed spaces or semi-closed spaces that become easy avenues for networking of child abusers, people wanting to trade child sexual abuse materials. Those kinds of spaces have also historically been used for grooming and even selling or advertising people for sex trafficking.”

McNamara added that while on paper TikTok has strong safety policies to protect minors, “what happens in practice is the real test.” When it comes to proactively combating the sexualization of kids or the trading of child sexual abuse material, “TikTok is behind,” she added. New tools and features roll out on apps like TikTok without serious consideration of online safety, especially for children.

TikTok says it has zero tolerance for child sexual abuse material and strictly prohibits it on the platform. According to the company, when it becomes aware of any such content, it immediately removes it, bans the accounts involved, and reports them to the National Center for Missing & Exploited Children. TikTok also claims that all videos posted to the platform, both public and private, including those viewable only to the person inside the account, as well as direct messages, are subject to its AI moderation and, in some cases, additional human review.

However, when Forbes used the app’s reporting tool to flag several accounts and posts that contained violative material or that promoted post-in-private groups and recruited underage users to them, every report came back with “no violation.”

Among those questioning TikTok’s decision not to have default two-factor authentication (an industry standard) is Dr. Jennifer King—privacy and data policy fellow at the Stanford Institute for Human-Centered Artificial Intelligence and creator of a tool Yahoo uses to scan for CSAM.

“That’s a red flag, and you can absolutely know this is happening. It’s often a race against time: You create an account, and you either post a ton of CSAM or consume a bunch of CSAM as quickly as possible before the account gets detected, shut down, reported… it’s about distribution as quickly as possible.”

She said people in this space expect to keep these accounts for only a few hours or days, and that spotting and blocking such unusual or frequent logins would not be difficult for TikTok.

TikTok’s response

TikTok told Forbes that although it has a policy prohibiting the sharing of login credentials, it allows the practice on some accounts, particularly those with big followings or those managed by publicists or social media strategists.

Clearly, this policy is not only being bypassed in many cases; it simply isn’t being enforced.

Adair expressed her frustration that she is doing much of TikTok’s content moderation for the company. Her efforts to contact TikTok and even individual employees via LinkedIn have been unsuccessful. “[I’ve] never heard back from anybody. Not a single person.”

However, she continues to hear from TikTok users—including many young girls who have had issues with post-in-private. “Almost every single minor that has reached out to me has not told their parents what has happened.”

It’s essential for platforms like TikTok to keep up with the ever-changing ways people exploit technology. They must back their policies with action to put a stop to the pervasive issue of child sexual abuse online.

It may seem like an impossible and ever-changing task, but acknowledgment, awareness, and action are good places to start.

Why this matters

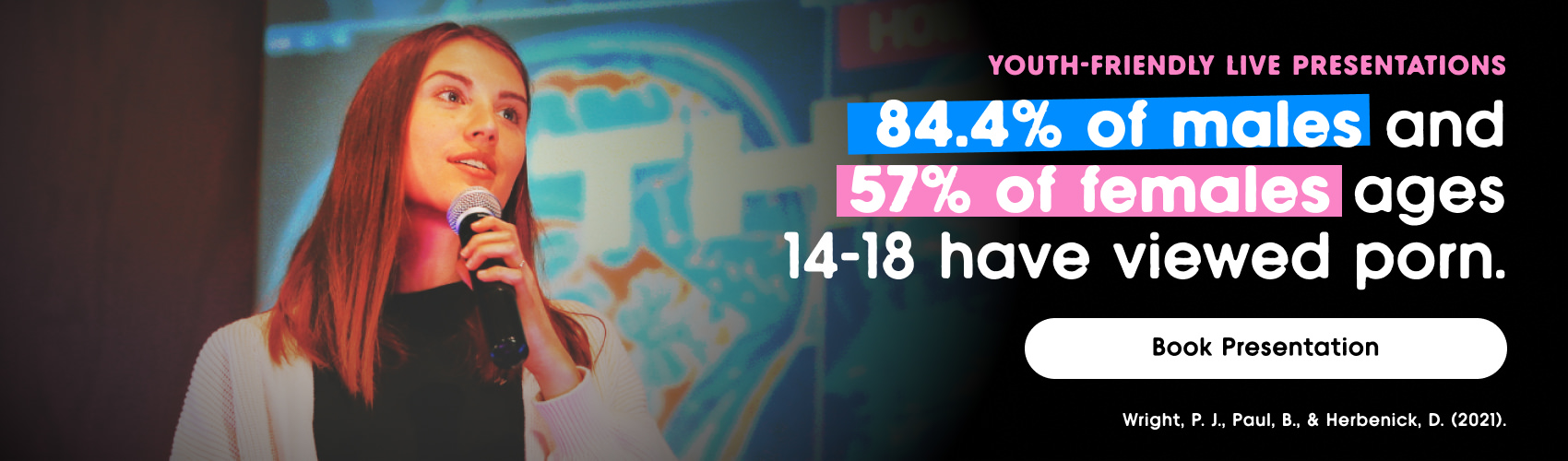

Data show us just how much porn has taken over the internet and social media platforms, especially for minors.

It’s no secret that porn is everywhere, and now it seems to have taken hold of our Explore pages on Instagram, our feeds on Facebook and Twitter, and even TikTok.

And if you’re a parent and you do ultimately catch your child watching porn, do not shame them. Ask them questions about how they feel, and listen to their answers. Point out the differences between porn sex and healthy sex—and point out that the former lacks intimacy, connection, and boundary setting.

While some pornographic social media accounts will ask users if they are over 18, teens can simply lie to get around these flimsy age restrictions. Though parents can install programs on kids’ devices that block pornography, today’s tech-savvy kids know how to get around these (and they can always use their friends’ unblocked phones instead).

The reality is that most teenagers will inevitably be exposed to porn—and parents must talk to them about it.

How to report CSEM

The common theme we’re seeing: exposure to and participation in child sexual abuse images further guarantees the survival of the industry. It’s time to break the cycle.

Without eliminating the industry, we cannot hope to eliminate the abuse and exploitation that accompany it. Now, more than ever, it’s time for us to raise our voices against this tide of abuse and stop the demand for it. Together, our voices are loud. Together, we must speak out against sexual exploitation. Are you with us?

To report an incident involving the possession, distribution, receipt, or production of child sexual abuse material, file a report on the website of the National Center for Missing & Exploited Children (NCMEC) at www.cybertipline.com, or call 1-800-843-5678.

Support this resource

Thanks for reading our article! Fight the New Drug is a registered 501(c)(3) nonprofit, which means the educational resources we create are made possible through donations from people like you. Join Fighter Club for as little as $10/month and help us educate on the harms of porn!
