The National Center for Missing & Exploited Children (NCMEC), whose CyberTipline is the nation’s centralized reporting system for the online exploitation of children, reported a staggering rise in reports of child sexual abuse material (CSAM) last year.

Numbers were through the roof in 2020 compared to previous years, which isn’t surprising given that so many people were staying home and isolated amid a global pandemic. But what’s particularly illuminating is a rising trend in the source of many of these images, and what it means for the future of fighting this global issue.

Let’s take a deeper look.

Related: Report Reveals One-Third Of Online Child Sex Abuse Images Are Posted By Kids Themselves

The rise of CSAM in 2020

Here’s a rundown of what NCMEC saw in reports of child sexual abuse material in 2020 compared to 2019.

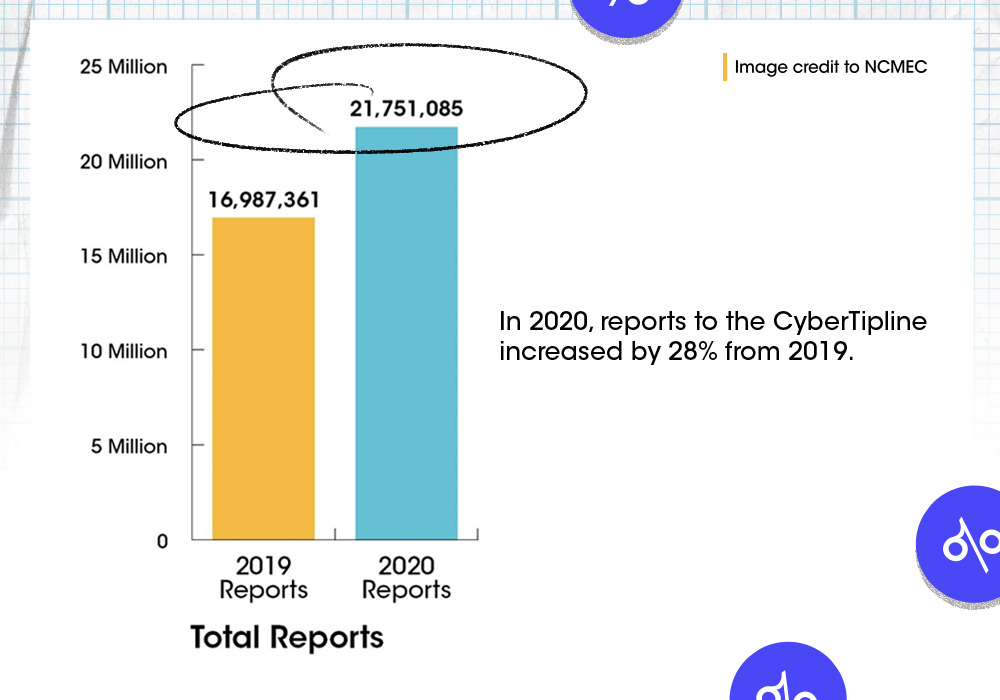

Total reports to the CyberTipline increased by 28%, from just under 17 million in 2019 to more than 21.7 million in 2020.

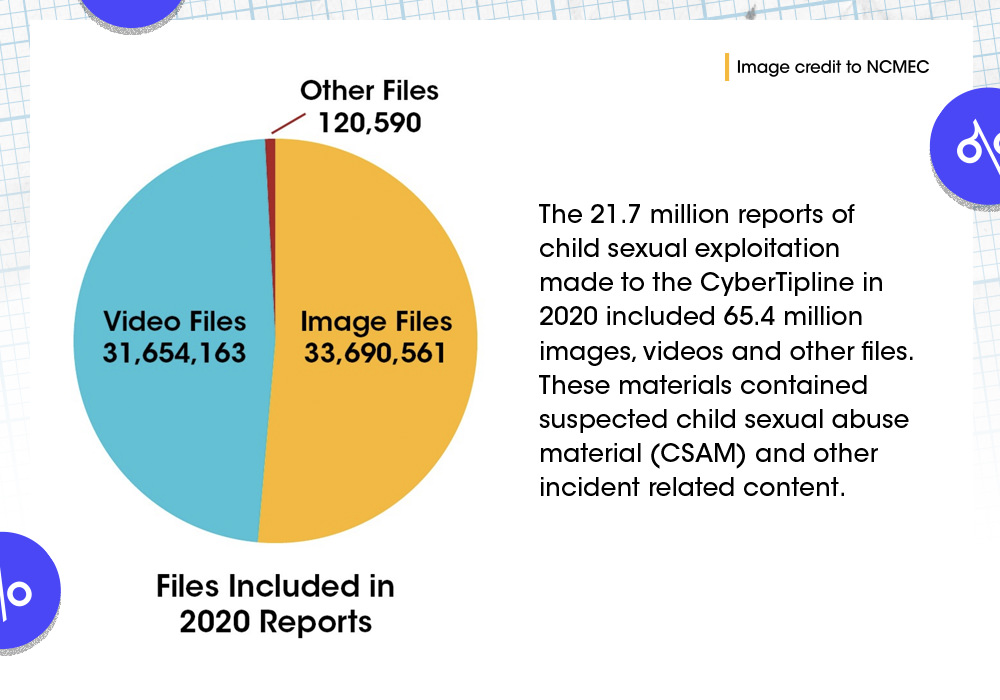

The 21.7 million reports of child sexual exploitation made to the CyberTipline in 2020 included 65.4 million images, videos, and other files.

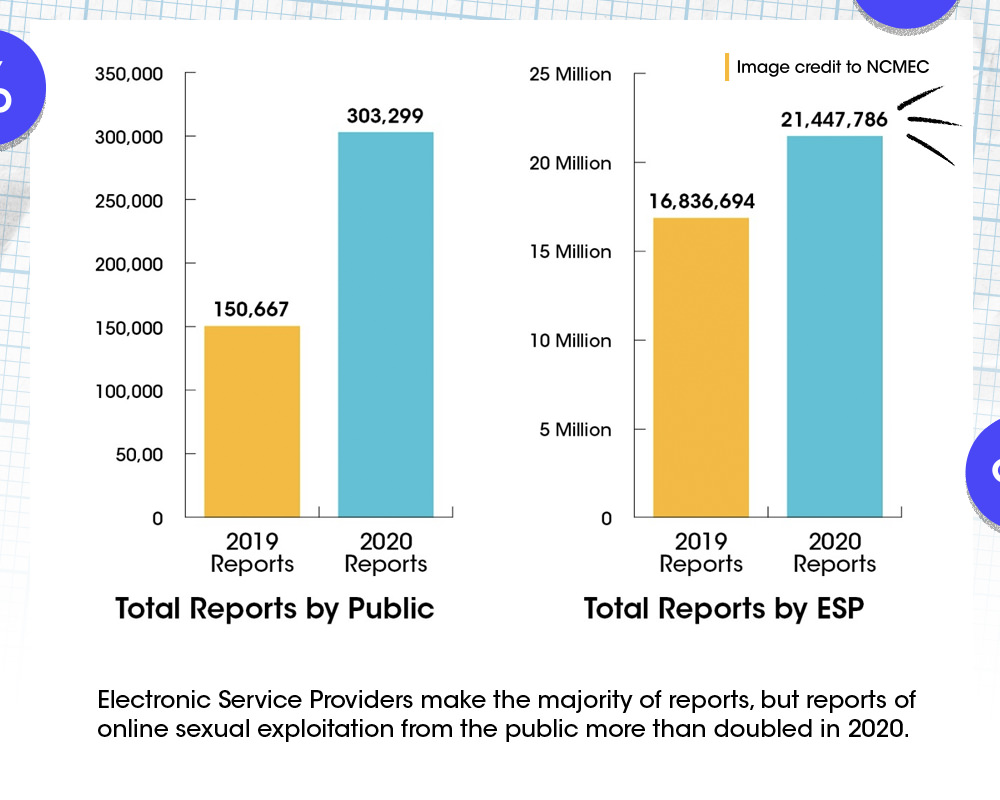

Year after year, the majority of reports to the CyberTipline come from the more than 1,400 Electronic Service Providers (ESPs) registered with NCMEC to voluntarily report instances of suspected child pornography they become aware of on their platforms.

However, reports of online sexual exploitation from the public more than doubled in 2020—from over 150,000 in 2019 to over 303,000 in 2020.

Of the ESPs that reported to NCMEC in 2020, Facebook (including other platforms the company owns, like Instagram and WhatsApp) topped the list by a long shot, with over 20.3 million reported instances of child sexual abuse material.

Note that a large number of reports from a tech company like Facebook does not necessarily mean there is more abusive content on its platforms. It may mean the company is doing a better job of identifying and reporting it. In those cases, higher numbers can actually be a positive sign of more thorough reporting.

Also on the list were Google (546,704), Snapchat (144,095), Twitter (65,062), TikTok (22,692), Microsoft (96,776), Amazon/Twitch (2,235), and MindGeek (13,229), the parent company of multiple adult content companies including Pornhub, YouPorn, RedTube, and Brazzers, among others.

Related: MindGeek, Pornhub’s Parent Company, Sued For Reportedly Hosting Videos Of Child Sex Trafficking

Of course, these numbers account for reports, not individual confirmed cases of abuse. But the rampant spread of child sexual abuse content online is not an issue to downplay.

NCMEC President and CEO John Clark explained that, of the 21.7 million CSAM reports received in 2020, 10.4 million were for unique images that had been reported numerous times. “This shows the power of the technology ESPs use to identify these known images of abuse. It also demonstrates the repetitive exploitation occurring, as these images are shared many times, further re-victimizing the children depicted.”

Facebook also analyzed the CSAM it reported and found that a large portion consisted of duplicates from reposts and shares, with copies of six videos allegedly responsible for over half of the child sexual abuse content reported.

Pornhub, which allegedly started reporting child abuse and nonconsensual content on the site only in the last few months, also claimed instances of duplicate content, estimating that 4,171 of its 13,229 reports were unique.

Related: Visa And Mastercard Sever Ties With Pornhub Due To Abusive Content On The Site

The global issue of self-generated CSAM

This unprecedented rise in child sexual abuse material is concerning, to say the least, but to be truly effective at fighting it, we have to understand the reality of what we’re dealing with.

An image of a child should never be shared to porn sites. Regardless of origin, these images pose a serious risk to children when they get into the wrong hands. But it matters to know that not all of this material is produced by an abuser: a lot of CSAM is actually created by minors themselves.

In fact, in a NetClean report from 2018, 90% of police officers investigating online child sexual abuse said it was common or very common to find self-generated sexual content during their investigations.

Thorn recently published research on self-generated CSAM and the complex challenges it brings. (You can download Thorn’s full report here.)

Some self-generated images represent a child who was groomed or coerced, while many teens today “share nudes” supposedly willingly. But why?


Through its research, Thorn found three critical themes that provide a deeper understanding.

1: 40% of teens agree that it’s normal for kids their age to share nudes with each other, and don’t view it as fundamentally harmful. This attitude of, “We all do it. It’s ok,” proves to be the norm for many teens who often view sexting or sending nudes as common behavior or an acceptable way to engage intimately with a partner.

2: 1 in 3 teens say they’ve seen non-consensually shared nudes—this is likely an underreported number given the sensitivity of the topic and the fear many kids have of potential consequences. Most teens who share nude content of themselves probably don’t anticipate it will end up in anyone’s hands besides the intended recipient, but this is happening at an alarming rate. And many youth are navigating this digital world and its accompanying risks with little or no guidance from adults.

3: 60% of kids place at least some of the blame on the victim. This culture of victim-blaming makes it significantly more difficult for kids to seek help if an image is shared of them without their consent. Kids anticipate being blamed first, and caregivers tend to share the same view—with 50% of female caregivers and 60% of male caregivers exclusively or predominantly blaming the child whose image was leaked.

Holding the porn industry accountable

Of course, a conversation about CSAM can’t be had without shining light on the industry that fetishizes, normalizes, and in many cases facilitates the abuse of minors. Consuming porn isn’t a passive, private pastime. It fuels the demand for sexual exploitation and is intricately woven into this global issue as a whole.

There’s still much to learn, and many more conversations to be had. But thanks to research from organizations like NCMEC, Thorn, and others, and Fighters like you around the world, we can take real steps toward a world free from sexual exploitation.

To report an incident involving the possession, distribution, receipt, or production of child sexual abuse material, file a report on the National Center for Missing & Exploited Children (NCMEC)’s website at www.cybertipline.com, or call 1-800-843-5678.
