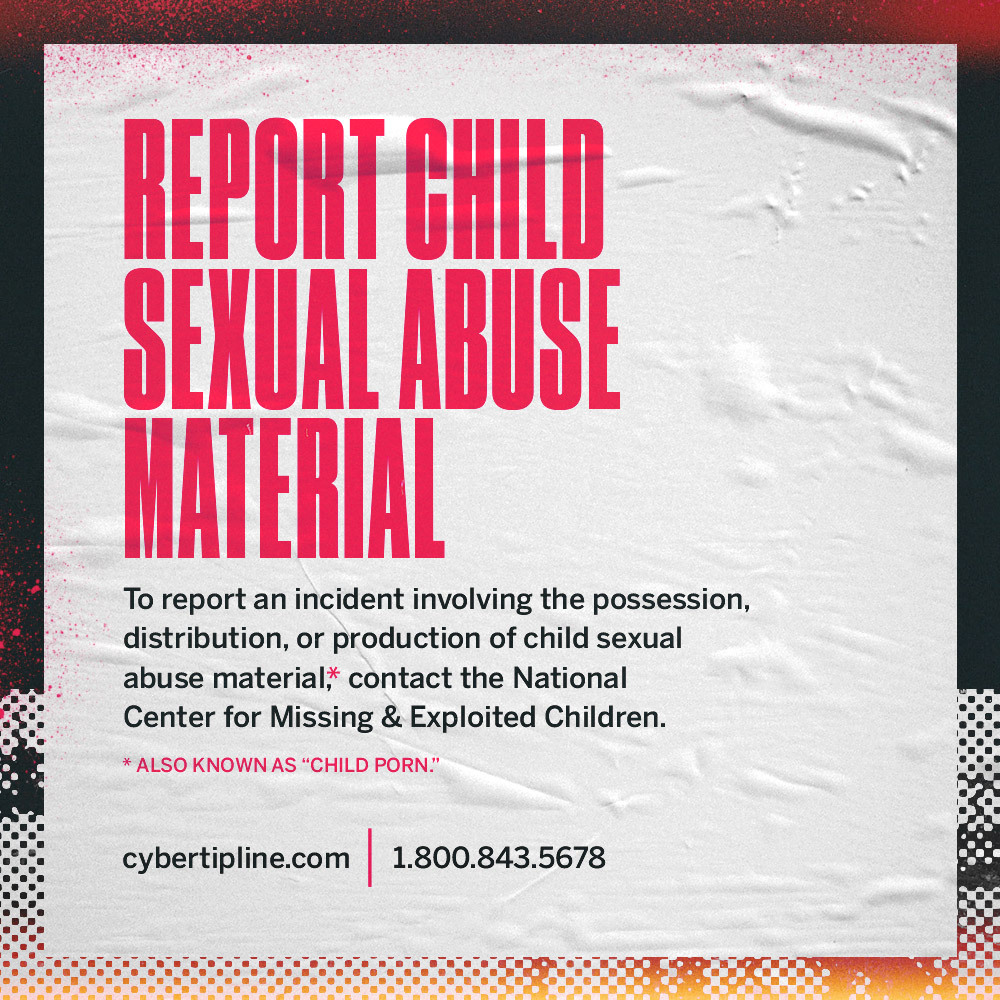

Disclaimer: Fight the New Drug is a non-religious and non-legislative awareness and education organization. Some of the issues discussed in the following article are legislatively-affiliated. Though our organization is non-legislative, we fully support the regulation of already illegal forms of pornography and sexual exploitation, like child sexual abuse material, and we support the fight against sex trafficking.

The European Union (EU) recently updated its digital laws to extend and improve personal privacy, but as a result, victims of child sexual abuse may be at risk. This is big news because similar policies could be adopted in the United States, too.

The European Electronic Communications Code (EECC) makes it potentially illegal for technology companies to continue their current processes of scanning for child sexual abuse material, which they then report to national organizations across Europe and to the CyberTipline operated by the National Center for Missing and Exploited Children (NCMEC) in the United States.

One of the intentions of the newly enacted EECC was to protect people’s privacy by reining in the power of tech companies to scan private communications. This was accomplished by broadening the definition of an Electronic Communications Service so more companies, including WhatsApp, Facebook, and Skype, now must comply with this EU privacy law.

Privacy advocates say mass screening of private communications is a privacy violation, while child protection advocates argue this screening identifies countless child abuse victims and their perpetrators and recovers the evidence needed to charge abusers.

Data from @missingkids shows a sharp, over 50% decrease in reports of online child sexual exploitation in the #EU. The stakes couldn’t be higher. The #EU must reach an agreement now to restore automated detection methods that have been used for years #ChildSafetyFirst https://t.co/OacydtkFh4

— Thorn (@thorn) February 17, 2021

What this means for anti-child exploitation efforts

Every year, 17 million voluntary reports of child sexual abuse material (CSAM) and grooming are made to authorities, and nearly 3 million images and conversations come from the EU. The situation has become even more dire during the COVID-19 crisis due to a sharp increase in child exploitation during lockdowns. What was already an uphill battle against child exploitation could get harder because of these unintended policy consequences.

Currently, tech companies use what’s called “hashing technologies” to compare images with a database of confirmed child abuse material. If an email attachment matches an image from that database, companies like Google or Microsoft will report that email to the NCMEC’s CyberTipline. This process works well for confirmed abuse material, but it does not necessarily catch new instances of abuse where the victim is not yet identified.
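To make the matching step concrete, here is a minimal sketch of the idea in Python. It is an illustration only, not how any particular company does it: it assumes an exact SHA-256 fingerprint as a stand-in, whereas real detection systems are generally described as using hashes that still match after an image is resized or re-encoded. The function names and the placeholder byte strings are hypothetical.

```python
import hashlib
from typing import Set


def file_hash(data: bytes) -> str:
    """Return a hex fingerprint of the file contents (exact-match SHA-256)."""
    return hashlib.sha256(data).hexdigest()


def matches_known_material(attachment: bytes, known_hashes: Set[str]) -> bool:
    """Check whether an attachment's fingerprint is in the database of confirmed material."""
    return file_hash(attachment) in known_hashes


# Hypothetical database: fingerprints of files that were previously confirmed.
# The byte strings here are harmless placeholders.
known_hashes = {file_hash(b"previously confirmed file")}

# A file already in the database is flagged for reporting.
print(matches_known_material(b"previously confirmed file", known_hashes))  # True

# A new file has no entry in the database, so it is not flagged.
print(matches_known_material(b"never-before-seen file", known_hashes))  # False
```

The second check shows the limitation described above: material that has never been confirmed and added to the database produces no match.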

Following the news about the EECC, Australia, New Zealand, Canada, the UK, and the US jointly declared that the law will prevent companies from doing this routine scanning and thus make it easier for children to be sexually exploited without detection. It could even make it impossible for law enforcement to investigate and prevent such abuse.

Their statement pointed out that the majority of reports to the NCMEC are from messaging services, and the abusive material is found with the use of hashing technologies that under the EECC as it stands would no longer be permitted.

“This is not the time to give predators a free pass to share videos of abuse and rape,” said Susie Hargreaves, Chief Executive of Internet Watch Foundation (IWF), the UK-based charity responsible for finding and removing CSAM online. “This is a stunning example of getting legislation wrong and having a potentially catastrophic impact on children… Anything which weakens protections for children is unacceptable.”

Already, we are seeing the effects child advocates were concerned about. The NCMEC compared EU-related CyberTipline reports from tech companies three weeks before and three weeks after the policy went into effect. Reports of child sexual exploitation within the EU fell by 46 percent. No doubt other factors could have contributed to the decrease, but such a dramatic fall seems to be a consequence of the EU policy.

Bear in mind, too, that a decrease in reports does not necessarily mean there is less abuse, as great as that would be.

“Every second and every child counts”

“Privacy is at the heart of the most basic understanding of human dignity,” according to UNICEF, and child sexual abuse and image-based sexual abuse are among the most extreme violations that try to destroy that dignity.

Digital privacy is important. Protecting children from online sexual abuse and preventing images of abuse from spreading across the internet is also important. When legislation comes down to choosing between the two, there will be a problem. What will have to be compromised in order to protect children?

Some companies, including Google, LinkedIn, Microsoft, Roblox, and Yubo, agreed and released a statement that they will “remain steadfast in honoring [their] safety commitments” by continuing to scan and report exploitation. It is unclear what could happen to companies that continue to do so. Will they be punished?

Private companies play a key role alongside governments and international organizations in the fight against online exploitation. No single group can eradicate abuse on its own. These companies are one line of defense, and efforts to protect children will be worse off without their help.

Privacy advocates raise valid concerns about big tech companies scanning private communications. Some call it a “slippery slope” that could lead to more invasive actions, but for Maud de Boer-Buquicchio, President of Missing Children Europe, child protection has to come first.

“We cannot let privacy law prevail over the need to scan and take down content of abuse of children,” she said. “Every second and every child counts.”

Click here to learn how to report child sexual abuse material.
