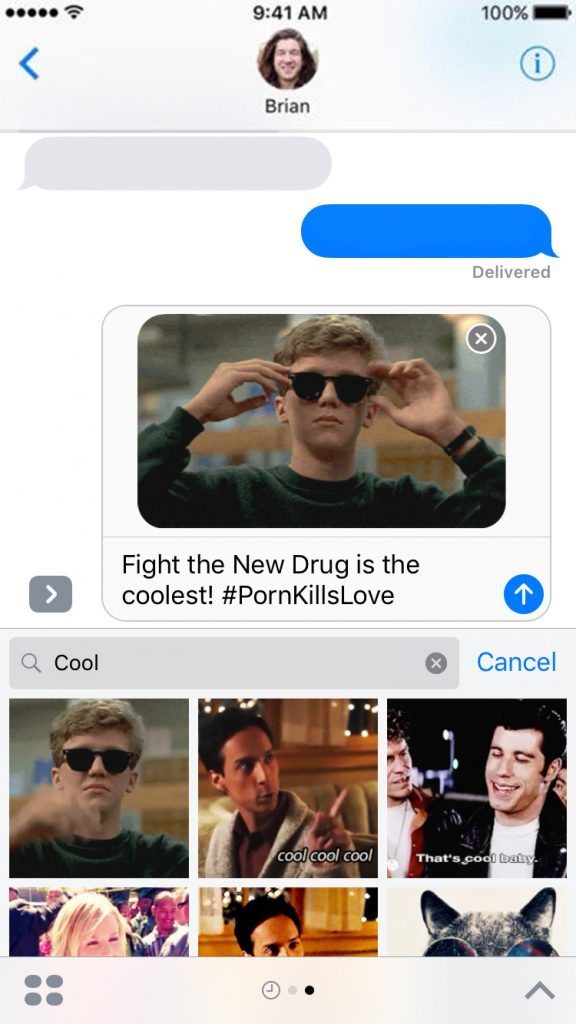

While the whole world is buzzing about the new release of Apple’s iPhone 7 this September 2016, and all of its incredible features, the new operating system rolled out this past week as well. iPhone users who update to iOS 10 can now enjoy many amazing new features, including a redesigned iMessage platform that allows for new emojis, GIFs, and reactions to text messages.

One of the most highly anticipated features of iOS 10 is a new GIF keyboard that lets you post GIFs directly into iMessage, pulling them in from a variety of outside sources.

But as with any instance of pulling images from third-party sources, there are bound to be a few inappropriate ones that slip through the cracks, and iOS 10’s GIF search is no different. Somehow, hardcore porn clips started popping up in people’s conversations.

As first reported by Deadspin, typing a certain word into the GIF search would bring up a sexually suggestive cartoon image. Deadspin says Apple immediately fixed that issue, but the iOS 10 porn problem persisted. Users later found that searching another, seemingly innocent word in the GIF keyboard turned up an extremely NSFW image of a hardcore sex act.

One woman told The Verge that the porn problem led to an embarrassing situation when her eight-year-old daughter was trying to send a message to her dad. The girl was presented with “a very explicit image,” and the mother “grabbed the phone from her immediately.” “I typed in the word, which isn’t sexual in any nature. It’s just a word, not like butt or anything else,” she added.

The mother says her daughter is fine (“she had no idea”), but she’s concerned about the possibility of other kids being accidentally exposed to porn through what’s supposed to be a goofy feature. “My daughter uses it because there’s cartoons and fart jokes, that kind of stuff,” she told The Verge. “That’s hardcore porn. People making out she might see on ABC. That’s something that could potentially be pretty traumatizing for a small child.”

These instances are bad news for Apple, which has long been particularly strict about sexual content. Legendary Apple co-founder Steve Jobs said in a 2010 email exchange with a customer that he believed he had a “moral responsibility” to reject pornographic content on Apple products. He famously wrote, “Folks who want porn can buy an Android.”

Jobs also defended his stance against a critique from a magazine writer who objected to Apple calling the iPad a revolution in a commercial while banning porn from the App Store. “Revolutions are about freedom,” the journalist wrote. Jobs responded that Apple products offer users freedom from porn, and told the writer that he might care more about porn when he had children.

Apple’s App Store guidelines are very clear about pornography: Apps containing pornographic material, defined by Webster’s Dictionary as “explicit descriptions or displays of sexual organs or activities intended to stimulate erotic rather than aesthetic or emotional feelings”, will be rejected.

Apps that contain user generated content that is frequently pornographic (ex. “Chat Roulette” Apps) will be rejected.

Apple has yet to publicly respond to the iOS 10 porn search issue, but it got to work quickly to solve the problem, fixing the first search term within 10 hours and the more explicit one soon after.

Normalize not watching porn

In a society where porn has become so normalized and mainstream, hardcore porn can easily pop up when we least expect it. This may seem harmless or even funny to those who don’t realize porn’s harmful effects, but the long-term effects of exposure to hardcore porn, especially on children, can be very damaging. Most people who message us for help with their struggle with pornography tell us they were first exposed between the ages of 8 and 12. It is usually by accident, and it is almost always traumatizing.

These shocking images usually draw a young mind back for more, down a road that can turn into a lifelong addiction. While Apple is not entirely at fault for third-party images, this issue points to a much larger problem in our society that we need to speak up about.

Your Support Matters Now More Than Ever

Most kids today are exposed to porn by the age of 12. By the time they’re teenagers, 75% of boys and 70% of girls have already viewed it [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.], often before they’ve had a single healthy conversation about it.

Even more concerning: over half of boys and nearly 40% of girls believe porn is a realistic depiction of sex [Martellozzo, E., Monaghan, A., Adler, J. R., Davidson, J., Leyva, R., & Horvath, M. A. H. (2016). “I wasn’t sure it was normal to watch it”: A quantitative and qualitative examination of the impact of online pornography on the values, attitudes, beliefs and behaviours of children and young people. Middlesex University, NSPCC, & Office of the Children’s Commissioner.]. And among teens who have seen porn, more than 79% use it to learn how to have sex [Robb, M.B., & Mann, S. (2023). Teens and pornography. San Francisco, CA: Common Sense.]. That means millions of young people are getting sex ed from violent, degrading content, which becomes their baseline understanding of intimacy. Among the most popular pornographic videos, 33% to 88% contain physical aggression and nonconsensual, violence-related themes [Fritz, N., Malic, V., Paul, B., & Zhou, Y. (2020). A descriptive analysis of the types, targets, and relative frequency of aggression in mainstream pornography. Archives of Sexual Behavior, 49(8), 3041-3053. doi:10.1007/s10508-020-01773-0] [Bridges et al., 2010, “Aggression and Sexual Behavior in Best-Selling Pornography Videos: A Content Analysis,” Violence Against Women.].

From increasing rates of loneliness, depression, and self-doubt, to distorted views of sex, reduced relationship satisfaction, and riskier sexual behavior among teens, porn is impacting individuals, relationships, and society worldwide [Fight the New Drug. (2024, May). Get the Facts (Series of web articles). Fight the New Drug.].

This is why Fight the New Drug exists—but we can’t do it without you.

Your donation directly fuels the creation of new educational resources, including our awareness-raising videos, podcasts, research-driven articles, engaging school presentations, and digital tools that reach youth where they are: online and in school. It equips individuals, parents, educators, and youth with trustworthy resources to start the conversation.

Will you join us? We’re grateful for whatever you can give—but a recurring donation makes the biggest difference. Every dollar directly supports our vital work, and every individual we reach decreases sexual exploitation. Let’s fight for real love.