The dark web.

It’s a hidden internet hotbed of criminal activity that everyday search engines like Google and Yahoo don’t give the average internet user access to.

On it, a “lifetime” Netflix account goes for around $6, while $3,000 in counterfeit $20 bills goes for only $600. One could also use it to buy seven preloaded debit cards worth $17,500 total for $500 (and that includes express shipping!). Additionally, purchasing the login credentials of a $50,000 Bank of America account only costs $500.

Netflix accounts, hacking software, credit card numbers, prescription and illicit drugs, guns, counterfeit money, porn and so much more—it’s all there. But people trade a lot more than stolen passwords and fake cash on the platform.

According to some reports, 44% of all porn-related searches on the file-sharing, or P2P, networks that facilitate the dark web’s operation involve child abuse images—known commonly as child porn.


Because laws against child abuse images carry severe statutory penalties, including steep fines and up to life imprisonment, the material has long seemed nearly impossible to find on the publicly visible part of the internet, or “open web.”

Unfortunately, that’s all changing.

Child exploitation is being facilitated in ways that are unconnected to the dark web

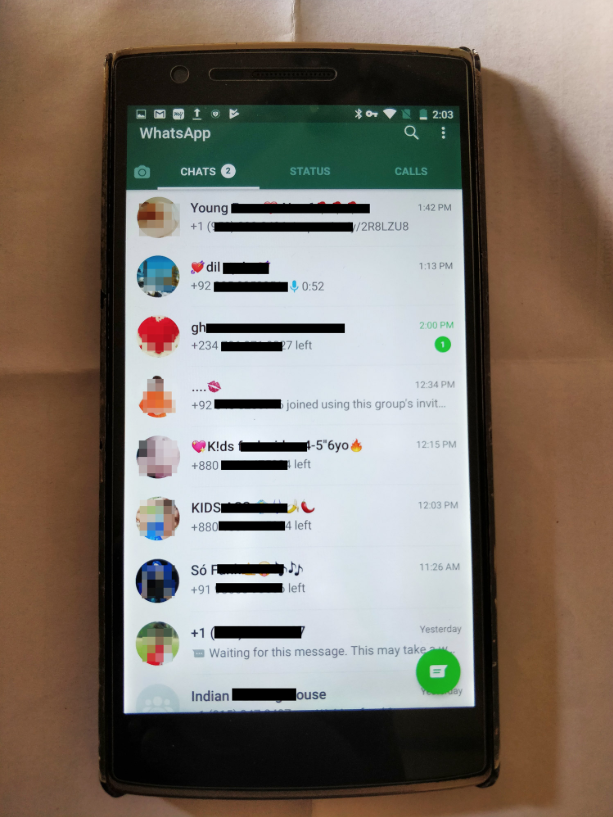

In a recent article, TechCrunch, a publisher of tech industry news, summarized the findings of two Israeli NGOs. The article “details how WhatsApp chat groups are being used to spread illegal child pornography.”

If you haven’t heard of WhatsApp, it’s an ultra-popular cross-platform messaging and calling application owned by Facebook that utilizes privacy technology.

More specifically, WhatsApp uses end-to-end encryption, which “ensures only you and the person you’re communicating with can read what’s sent and nobody in between, not even WhatsApp. Your messages are secured with locks, and only the recipient and you have the special keys needed to unlock and read your messages.”

To put it simply, end-to-end encryption allows people to share child abuse images in a way that keeps them and their message threads hidden from the rest of the world, meaning they can abuse children and distribute the material to others without getting caught.

After the release of TechCrunch’s story, Nitish Chandan, the founder and project manager at Cyber Peace Foundation, checked whether the proliferation of child porn through WhatsApp was also a big problem in India.

The results of his initial search were not pretty.

In a four-day search, he found over 50 WhatsApp groups where hundreds of Indian users were subscribing to and sending child sexual abuse material without any accountability in place.

How the chat groups are being run

Getting into these child porn chat groups is simple if you don’t care about the consequences of getting caught, according to reports.

Apparently, it’s so simple that anyone with a WhatsApp account and an internet connection could join in a few clicks, if they knew what to look for.

Most applications have a moderation team for exactly this reason: to fight the spread of illicit content, such as child porn.

Facebook has a 20,000-person team that polices the social network’s 2.27 billion users. Unfortunately, while Facebook owns WhatsApp, its moderation team does not moderate WhatsApp-related content.


Instead, WhatsApp operates “semi-independently,” leaving its own 300 employees in charge of a community of 1.5 billion users. Sadly, but understandably, they have not had the resources necessary to stop the distribution of illicit content on the platform.

Not only has this allowed thousands upon thousands of groups to share the illegal material unchecked, but it has also allowed the WhatsApp child porn groups to monetize and sustain themselves by running ads through Google’s and Facebook’s ad networks.

Why this matters

Thankfully, WhatsApp has begun responding, banning over 130,000 accounts in a recent 10-day period for violating its policies against child exploitation.

That’s good news because if performing in porn is harmful for adult performers, even those who choose to perform, imagine how much more harmful it is for a child who is forced to perform by predators and traffickers.

Take it from the experience of Elizabeth Smart, a child abduction and rape survivor. In an exclusive Fight the New Drug interview, she stated that the abuse she faced at the hands of her captor made her life a “living hell.”

In a statement, WhatsApp responded forcefully to the facts that TechCrunch’s story brought to light: “WhatsApp has a zero-tolerance policy around child sexual abuse. We deploy our most advanced technology, including artificial intelligence, to scan profile photos and images in reported content, and actively ban accounts suspected of sharing this vile content.”

But WhatsApp didn’t stop there. The company also stated that the fight does not end with the creation of a “zero-tolerance policy,” adding that “…technology companies must work together to stop [the spread of child porn].”


In other words, fighting the exploitation of children is not a solo mission for WhatsApp or for technology companies alone. It’s a fight that demands that we, too, raise our voices, in partnership with technology companies, against the unparalleled abuse and exploitation children around the world are facing.

It will take countless individuals willing to speak out and report content whenever they see it, as well as technology companies like WhatsApp speaking out for those who do not have the ability to do so for themselves. Will you join us?